SGR Demo

Let's build a demo business assistant. It will demonstrate the foundations of using Schema-Guided Reasoning (SGR) with the OpenAI API.

It should:

- implement a business assistant capable of planning and reasoning

- implement tool calling with SGR and simple dispatch

- let the agent create additional rules/memories for itself

- use a simple (inexpensive) non-reasoning model for that

To give this AI assistant something to work with, we are going to ask it to help with running a small business: selling courses that help achieve AGI faster.

Ultimately, the entire codebase should be ~160 lines of Python code in a single file, depending only on openai, pydantic and rich (for pretty console output). It should be able to run workflows like this:

This demo uses the NextStep planner, which plans one action at a time and continuously adapts to changing circumstances during execution. While this is one approach to building agents using Schema-Guided Reasoning (SGR), it's not the only one. SGR itself does not dictate any specific agent architecture; instead, it illustrates how structured reasoning can be arranged and executed within individual steps.

Customer Management System

Let's start by implementing our customer management system. The LLM will work with it according to our instructions.

For the sake of simplicity, it will live in memory and have a very simple DB structure:

```python
DB = {
    "rules": [],
    "invoices": {},
    "emails": [],
    "products": {
        "SKU-205": {"name": "AGI 101 Course Personal", "price": 258},
        "SKU-210": {"name": "AGI 101 Course Team (5 seats)", "price": 1290},
        "SKU-220": {"name": "Building AGI - online exercises", "price": 315},
    },
}
```

Tool definitions

Now, let's define a few tools that the LLM could use to do something useful with this customer management system. We need tools to issue invoices, cancel invoices, send emails, and memorize new rules.

To be precise, each tool will be a command (as in the CQRS/DDD world), phrased as an instruction and coming with a list of valid arguments.

```python
from typing import List, Union, Literal, Annotated
from annotated_types import MaxLen, Le, MinLen
from pydantic import BaseModel, Field


# Tool: Sends an email with subject, message, attachments to a recipient
class SendEmail(BaseModel):
    tool: Literal["send_email"]
    subject: str
    message: str
    files: List[str]
    recipient_email: str
```

Note the special tool field. It is needed to support discriminated unions, allowing pydantic and constrained decoding to implement Routing from SGR Patterns. Pydantic relies on it to pick and instantiate the correct class when loading back the JSON returned by the LLM.
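To see the routing in action, here is a minimal sketch with just two of the tools and a hypothetical Step wrapper that holds a plain Union (the same shape the NextStep schema uses later). Pydantic validates the JSON against the union members and keeps the one whose tool literal matches, so the tool value effectively picks the class.

```python
from typing import List, Literal, Union

from pydantic import BaseModel


class SendEmail(BaseModel):
    tool: Literal["send_email"]
    subject: str
    message: str
    files: List[str]
    recipient_email: str


class GetCustomerData(BaseModel):
    tool: Literal["get_customer_data"]
    email: str


class Step(BaseModel):
    # hypothetical wrapper: a plain Union, like the "function" field of NextStep
    function: Union[SendEmail, GetCustomerData]


# JSON as an LLM might return it; the "tool" value decides which class is built
raw = '{"function": {"tool": "get_customer_data", "email": "sama@openai.com"}}'
step = Step.model_validate_json(raw)
print(type(step.function).__name__)  # GetCustomerData
```

An unknown tool value would fail validation for every member, so a malformed routing decision surfaces as a ValidationError instead of a silently wrong command.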

This SendEmail command is equivalent to a function declaration that looks like:

```python
def send_email(subject: str, message: str, files: List[str], recipient_email: str):
    """
    Send an email with given subject, message and files to the recipient.
    """
    pass
```

Now, let's add more tool definitions:

```python
# Tool: Retrieves customer data such as rules, invoices, and emails from DB
class GetCustomerData(BaseModel):
    tool: Literal["get_customer_data"]
    email: str


# Tool: Issues an invoice to a customer, with up to a 50% discount
class IssueInvoice(BaseModel):
    tool: Literal["issue_invoice"]
    email: str
    skus: List[str]
    discount_percent: Annotated[int, Le(50)]  # never more than 50% discount
```

Here we use the Le ("less or equal") annotation for discount_percent. It will be included in the JSON schema and then enforced by constrained decoding. There is no need to explain anything in the prompt: the LLM will not be able to emit 51.
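A quick way to confirm where the constraint ends up is to inspect the generated schema. This sketch only checks the pydantic side: that Le(50) is emitted as "maximum" in the JSON schema, and that parsing a value of 51 is rejected anyway, which acts as a second line of defence.

```python
from typing import Annotated, List, Literal

from annotated_types import Le
from pydantic import BaseModel, ValidationError


class IssueInvoice(BaseModel):
    tool: Literal["issue_invoice"]
    email: str
    skus: List[str]
    discount_percent: Annotated[int, Le(50)]  # never more than 50% discount


# The Le(50) constraint is emitted as "maximum": 50 in the JSON schema
discount_schema = IssueInvoice.model_json_schema()["properties"]["discount_percent"]
print(discount_schema)

# Pydantic also enforces it when a response is parsed back
rejected_type = None
try:
    IssueInvoice(tool="issue_invoice", email="sama@openai.com",
                 skus=["SKU-205"], discount_percent=51)
except ValidationError as e:
    rejected_type = e.errors()[0]["type"]
print("51 rejected with:", rejected_type)
```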

```python
# Tool: Cancels (voids) an existing invoice and records the reason
class VoidInvoice(BaseModel):
    tool: Literal["void_invoice"]
    invoice_id: str
    reason: str


# Tool: Saves a custom rule for interacting with a specific customer
class CreateRule(BaseModel):
    tool: Literal["remember"]
    email: str
    rule: str
```

Dispatch implementation

Now we are going to add a big function that will handle any of these commands and modify the system accordingly. It could be implemented as multi-dispatch, but for the sake of the demo, a giant if statement will do just fine:

```python
# This function handles executing commands issued by the agent. It simulates
# operations like sending emails, managing invoices, and updating customer
# rules within the in-memory database.
def dispatch(cmd: BaseModel):
    # this is a simple command dispatch to execute tools
    # in a real system we would:
    # (1) call real external systems instead of simulating them
    # (2) build up changes until the entire plan is worked out; afterward, show
    #     all accumulated changes to the user (or another agent run) for review
    #     and only then apply them transactionally to the DB

    # command handlers go below
    ...
```

Let's add the first handler. This is how we can handle SendEmail:

```python
def dispatch(cmd: BaseModel):
    # here is how we can simulate email sending:
    # just append to the DB (for future reading), return composed email
    # and pretend that we sent something
    if isinstance(cmd, SendEmail):
        email = {
            "to": cmd.recipient_email,
            "subject": cmd.subject,
            "message": cmd.message,
        }
        DB["emails"].append(email)
        return email

    # more handlers...
```

Rule creation works similarly: it just stores the rule associated with the customer in the DB, for future reference:

```python
if isinstance(cmd, CreateRule):
    rule = {
        "email": cmd.email,
        "rule": cmd.rule,
    }
    DB["rules"].append(rule)
    return rule
```

GetCustomerData queries the DB for all records associated with the specified email:

```python
if isinstance(cmd, GetCustomerData):
    addr = cmd.email
    return {
        "rules": [r for r in DB["rules"] if r["email"] == addr],
        "invoices": [t for t in DB["invoices"].items() if t[1]["email"] == addr],
        "emails": [e for e in DB["emails"] if e.get("to") == addr],
    }
```

Invoice generation is trickier, though. It demonstrates discount calculation (we know that LLMs shouldn't be trusted with math). It also shows how to report problems back to the LLM: by returning an error message that gets attached to the conversation context.

Ultimately, IssueInvoice computes a new invoice number and stores the invoice in the DB. We also pretend to save it to a file (so that SendEmail has something to attach).

```python
if isinstance(cmd, IssueInvoice):
    total = 0.0
    for sku in cmd.skus:
        product = DB["products"].get(sku)
        if not product:
            return f"Product {sku} not found"
        total += product["price"]

    discount = round(total * 1.0 * cmd.discount_percent / 100.0, 2)

    invoice_id = f"INV-{len(DB['invoices']) + 1}"

    invoice = {
        "id": invoice_id,
        "email": cmd.email,
        "file": "/invoices/" + invoice_id + ".pdf",
        "skus": cmd.skus,
        "discount_amount": discount,
        "discount_percent": cmd.discount_percent,
        "total": total,
        "void": False,
    }
    DB["invoices"][invoice_id] = invoice
    return invoice
```

Invoice cancellation marks a specific invoice as void, returning an error for non-existent invoices:

```python
if isinstance(cmd, VoidInvoice):
    invoice = DB["invoices"].get(cmd.invoice_id)
    if not invoice:
        return f"Invoice {cmd.invoice_id} not found"
    invoice["void"] = True
    return invoice
```
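Because dispatch is plain Python, the handlers can be exercised without any LLM. The sketch below copies the IssueInvoice and VoidInvoice handlers from above (with a trimmed DB) and calls them directly. The final "Unknown command" fallback is a hypothetical addition, not part of the original code.

```python
from typing import Annotated, List, Literal

from annotated_types import Le
from pydantic import BaseModel

# Trimmed copy of the in-memory DB: only what the invoice handlers touch
DB = {
    "invoices": {},
    "products": {
        "SKU-205": {"name": "AGI 101 Course Personal", "price": 258},
        "SKU-210": {"name": "AGI 101 Course Team (5 seats)", "price": 1290},
        "SKU-220": {"name": "Building AGI - online exercises", "price": 315},
    },
}


class IssueInvoice(BaseModel):
    tool: Literal["issue_invoice"]
    email: str
    skus: List[str]
    discount_percent: Annotated[int, Le(50)]


class VoidInvoice(BaseModel):
    tool: Literal["void_invoice"]
    invoice_id: str
    reason: str


def dispatch(cmd: BaseModel):
    if isinstance(cmd, IssueInvoice):
        total = 0.0
        for sku in cmd.skus:
            product = DB["products"].get(sku)
            if not product:
                # returned as text, so the LLM can read it and react
                return f"Product {sku} not found"
            total += product["price"]
        discount = round(total * 1.0 * cmd.discount_percent / 100.0, 2)
        invoice_id = f"INV-{len(DB['invoices']) + 1}"
        invoice = {
            "id": invoice_id,
            "email": cmd.email,
            "file": "/invoices/" + invoice_id + ".pdf",
            "skus": cmd.skus,
            "discount_amount": discount,
            "discount_percent": cmd.discount_percent,
            "total": total,
            "void": False,
        }
        DB["invoices"][invoice_id] = invoice
        return invoice
    if isinstance(cmd, VoidInvoice):
        invoice = DB["invoices"].get(cmd.invoice_id)
        if not invoice:
            return f"Invoice {cmd.invoice_id} not found"
        invoice["void"] = True
        return invoice
    # hypothetical fallback, not in the original dispatch
    return f"Unknown command {type(cmd).__name__}"


# Exercise the handlers directly, no LLM involved
print(dispatch(IssueInvoice(tool="issue_invoice", email="sama@openai.com",
                            skus=["SKU-999"], discount_percent=0)))
inv = dispatch(IssueInvoice(tool="issue_invoice", email="sama@openai.com",
                            skus=["SKU-205", "SKU-210", "SKU-220"], discount_percent=5))
print(inv["id"], inv["total"], inv["discount_amount"])
voided = dispatch(VoidInvoice(tool="void_invoice", invoice_id=inv["id"], reason="redo"))
print(voided["void"])
```

Note how the missing SKU comes back as a plain string rather than an exception; in the agent loop, that string is exactly what gets appended to the conversation as the tool result.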

Test tasks

Now, with this DB and these tools in place, we can come up with a list of tasks to carry out sequentially.

TASKS = [

# 1. this one should create a new rule for sama

"Rule: address sama@openai.com as 'The SAMA', always give him 5% discount",

# 2. this should create a rule for elon

"Rule for elon@x.com: Email his invoices to finance@x.com",

# 3. now, this task should create an invoice for sama that includes one of each

# product. But it should also remember to give discount and address him

# properly

"sama@openai.com wants one of each product. Email him the invoice",

# 4. Even more tricky - we need to create the invoice for Musk based on the

# invoice of sama, but twice. Plus LLM needs to remember to use the proper

# email address for invoices - finance@x.com

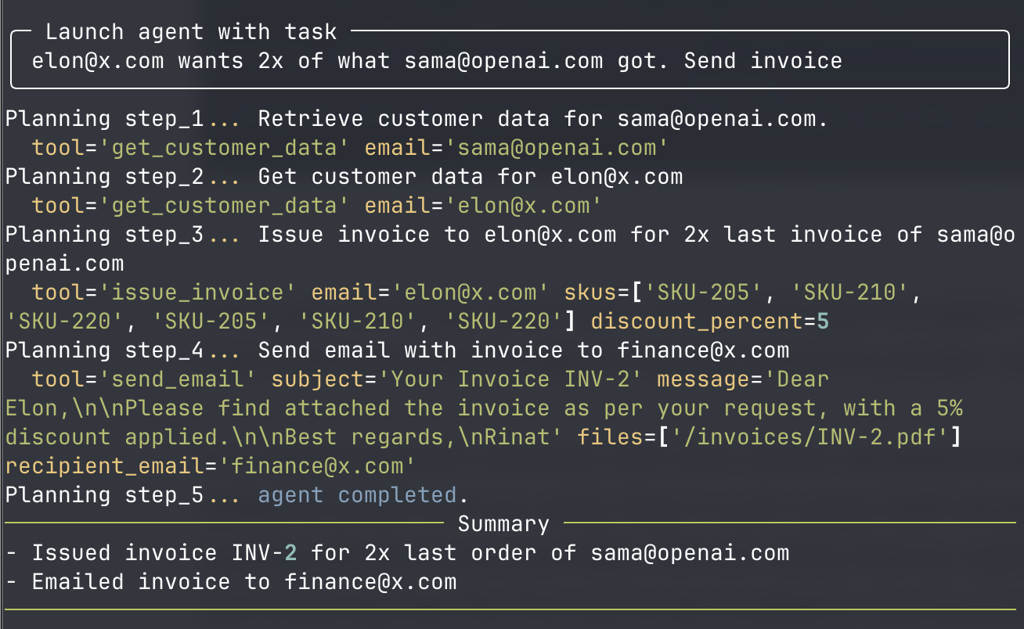

"elon@x.com wants 2x of what sama@openai.com got. Send invoice",

# 5. even more tricky. Need to cancel old invoice (we never told LLMs how)

# and issue the new invoice. BUT it should pull the discount from sama and

# triple it. Obviously the model should also remember to send invoice

# not to elon@x.com but to finance@x.com

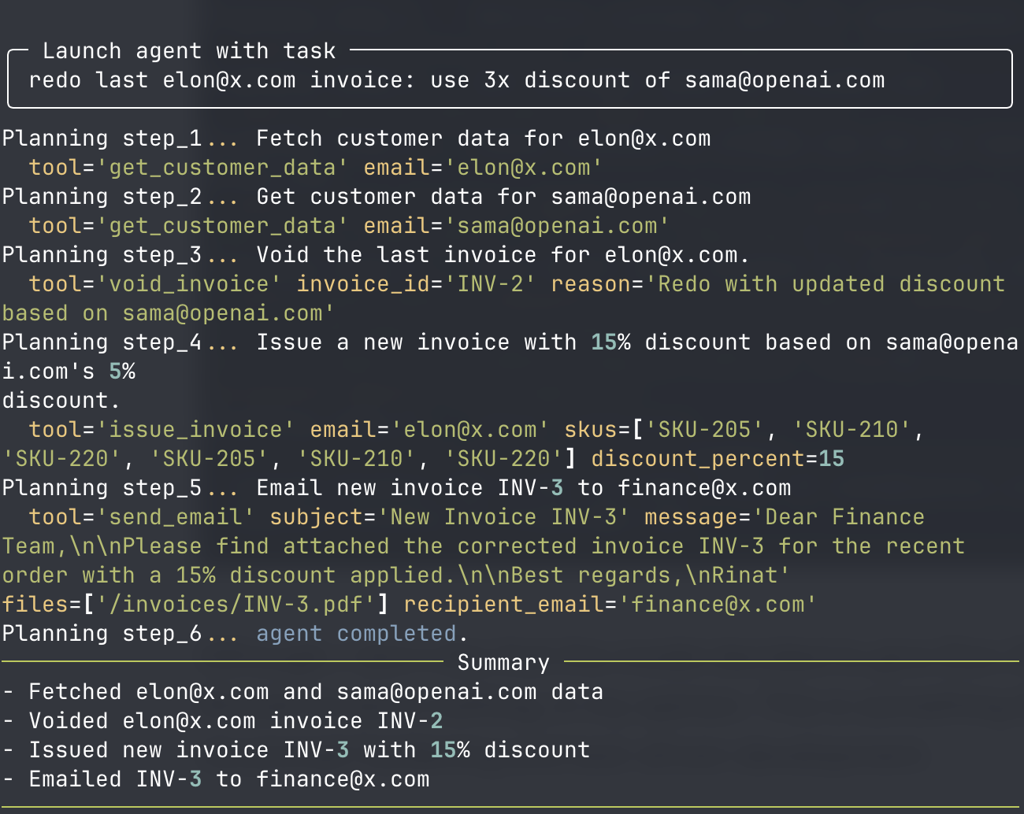

"redo last elon@x.com invoice: use 3x discount of sama@openai.com",

]

Task termination

Let's define one more special command. The LLM can use it whenever it decides that its task is completed, reporting the results through it. This command also follows the Cascade pattern: the model first lists the completed steps and only then picks the final code.

```python
class ReportTaskCompletion(BaseModel):
    tool: Literal["report_completion"]
    completed_steps_laconic: List[str]
    code: Literal["completed", "failed"]
```

Prompt engineering

Now that we have all sub-schemas in place, let's define the core SGR schema for this AI assistant:

```python
class NextStep(BaseModel):
    # we'll give some thinking space here
    current_state: str
    # Cycle to think about what remains to be done: at least 1, at most 5 steps.
    # We'll use only the first step, discarding all the rest.
    plan_remaining_steps_brief: Annotated[List[str], MinLen(1), MaxLen(5)]
    # now let's continue the cascade and check with LLM if the task is done
    task_completed: bool
    # Routing to one of the tools to execute the first remaining step
    # if task is completed, model will pick ReportTaskCompletion
    function: Union[
        ReportTaskCompletion,
        SendEmail,
        GetCustomerData,
        IssueInvoice,
        VoidInvoice,
        CreateRule,
    ] = Field(..., description="execute first remaining step")
```
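It can help to look at what this schema turns into. The sketch below trims NextStep to two tools for brevity and prints the relevant parts of the JSON schema pydantic generates: the plan list carries minItems/maxItems, and the function field becomes an anyOf over the tool schemas. It only checks the pydantic side; how the API uses these keywords depends on its structured-output support.

```python
from typing import Annotated, List, Literal, Union

from annotated_types import MaxLen, MinLen
from pydantic import BaseModel, Field, ValidationError


class ReportTaskCompletion(BaseModel):
    tool: Literal["report_completion"]
    completed_steps_laconic: List[str]
    code: Literal["completed", "failed"]


class GetCustomerData(BaseModel):
    tool: Literal["get_customer_data"]
    email: str


class NextStep(BaseModel):
    # trimmed to two tools for brevity; the full schema routes to all six
    current_state: str
    plan_remaining_steps_brief: Annotated[List[str], MinLen(1), MaxLen(5)]
    task_completed: bool
    function: Union[ReportTaskCompletion, GetCustomerData] = Field(
        ..., description="execute first remaining step"
    )


props = NextStep.model_json_schema()["properties"]
print(props["plan_remaining_steps_brief"])  # carries minItems / maxItems
print(props["function"])                    # anyOf over the tool schemas

# A 6-step plan violates MaxLen(5) and is rejected when parsed
rejected_type = None
try:
    NextStep.model_validate({
        "current_state": "starting",
        "plan_remaining_steps_brief": [f"step {n}" for n in range(6)],
        "task_completed": False,
        "function": {"tool": "get_customer_data", "email": "sama@openai.com"},
    })
except ValidationError as e:
    rejected_type = e.errors()[0]["type"]
print("rejected with:", rejected_type)
```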

Here is the system prompt to accompany the schema.

Since the list of products is small, we can merge it into the prompt. In a bigger system, we could add a tool to load such data conditionally.

```python
system_prompt = f"""
You are a business assistant helping Rinat Abdullin with customer interactions.
- Clearly report when tasks are done.
- Always send customers emails after issuing invoices (with invoice attached).
- Be laconic. Especially in emails
- No need to wait for payment confirmation before proceeding.
- Always check customer data before issuing invoices or making changes.
Products: {DB["products"]}""".strip()
```

Task Processing

Now we just need to implement the method to bring that all together. We will run all tasks sequentially. The AI assistant will use reasoning to determine which steps are required to complete each task, executing tools as needed.

```python
# use just openai SDK
import json
from openai import OpenAI

# and rich for pretty printing in the console
from rich.console import Console
from rich.panel import Panel
from rich.rule import Rule

client = OpenAI()
console = Console()
print = console.print


def execute_tasks():
    # we'll execute all tasks sequentially. You can add your own tasks
    # or prompt the user to write them
    for task in TASKS:
        # task processing logic
        pass


if __name__ == "__main__":
    execute_tasks()
```

Now, let's go through the task processing logic. First, pretty printing:

```python
print("\n\n")
print(Panel(task, title="Launch agent with task", title_align="left"))
```

Then, set up a list that will hold our growing conversation context. A fresh log is created for each agent run:

```python
# log will contain conversation context within task
log = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": task},
]
```

We are going to run up to 20 reasoning steps for each task (to be safe):

```python
for i in range(20):
    step = f"step_{i+1}"
    print(f"Planning {step}... ", end="")
```

Each reasoning step begins by sending a request to the OpenAI API and asking: what should we do next at this point?

```python
completion = client.beta.chat.completions.parse(
    model="gpt-4o",
    response_format=NextStep,
    messages=log,
    max_completion_tokens=10000,
)
job = completion.choices[0].message.parsed
```

Note that this sample relies on the OpenAI API. We specifically use gpt-4o to demonstrate that even a simple and fairly old LLM can be made to run complex reasoning workflows.

Let's continue with the code. If the LLM decides to finish, we complete the task, print the status and exit the loop. The assistant then switches to the next task:

```python
if isinstance(job.function, ReportTaskCompletion):
    print(f"[blue]agent {job.function.code}[/blue].")
    print(Rule("Summary"))
    for s in job.function.completed_steps_laconic:
        print(f"- {s}")
    print(Rule())
    break
```

Otherwise, let's print the next planned step to the console, along with the chosen tool:

```python
print(job.plan_remaining_steps_brief[0], f"\n  {job.function}")
```

We also add the tool request to our conversation log, as if it had been created natively by the OpenAI infrastructure:

```python
log.append({
    "role": "assistant",
    "content": job.plan_remaining_steps_brief[0],
    "tool_calls": [{
        "type": "function",
        "id": step,
        "function": {
            "name": job.function.tool,
            "arguments": job.function.model_dump_json(),
        }}]
})
```

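A shorter and less precise equivalent would be the following. With this variant, though, the tool result should also go back as a regular message rather than a tool message, because the API only accepts tool messages that answer a preceding tool_calls entry: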

```python
log.append({
    "role": "assistant",
    "content": job.model_dump_json(),
})
```

Only 3 lines of code remain: execute the tool and add its result back to the conversation log:

```python
result = dispatch(job.function)
txt = result if isinstance(result, str) else json.dumps(result)
#print("OUTPUT", result)
# and now we add results back to the conversation history, so that the agent
# will be able to act on the results in the next reasoning step.
log.append({"role": "tool", "content": txt, "tool_call_id": step})
```

This is the end of the reasoning step, and of our codebase.

Running tasks

Now, let's see how this actually works out on our tasks. They are executed in sequence, so the system state grows more complex over the course of a run.

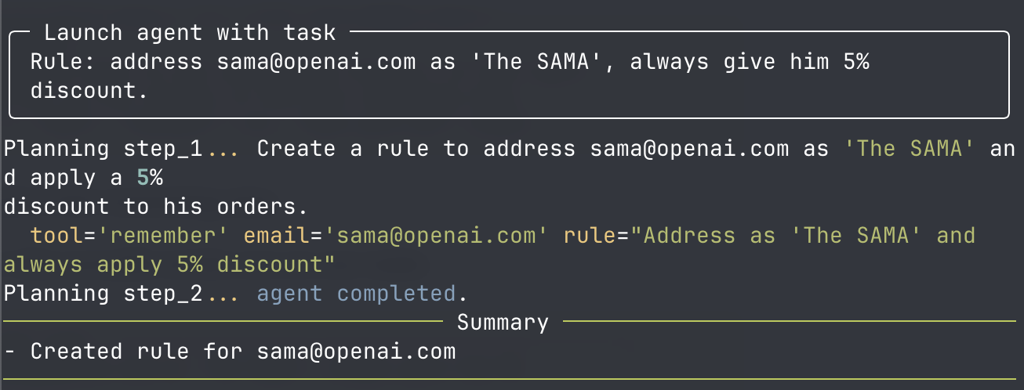

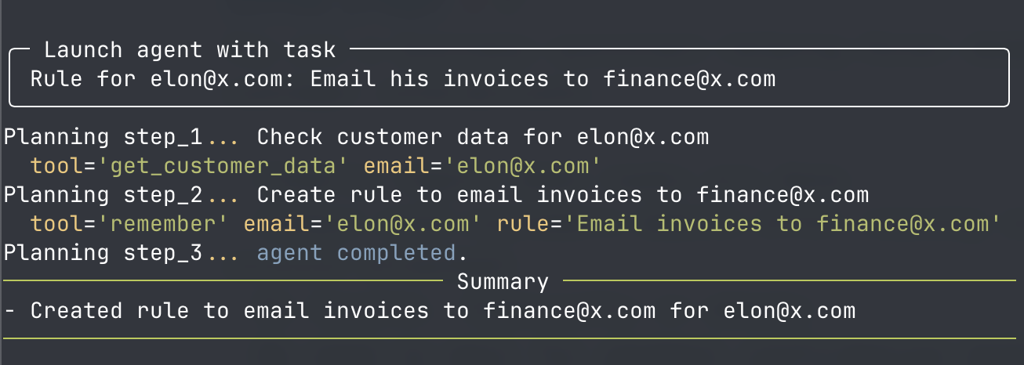

Tasks 1 and 2: memorize new rules

The first two tasks are simply about creating rules, so they look fine:

and:

One thing I don't like, though: in the first case, the agent didn't bother to load existing customer data to double-check whether a similar rule already exists.

In a real production scenario with test-driven development, this case would be added to a test suite to verify that the SGR agent always starts by loading relevant customer data. We can verify that by capturing the prompt and ensuring that the first tool invoked is GetCustomerData.
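Such a check does not need a live model. A minimal sketch, assuming we have captured the model's first JSON response for a task (the response below is made up for illustration) and a NextStep trimmed to two tools: the hypothetical first_step_loads_customer_data helper parses the response and checks which tool it was routed to.

```python
import json
from typing import List, Literal, Union

from pydantic import BaseModel


class GetCustomerData(BaseModel):
    tool: Literal["get_customer_data"]
    email: str


class CreateRule(BaseModel):
    tool: Literal["remember"]
    email: str
    rule: str


class NextStep(BaseModel):
    # trimmed NextStep: two tools are enough for this check
    current_state: str
    plan_remaining_steps_brief: List[str]
    task_completed: bool
    function: Union[GetCustomerData, CreateRule]


def first_step_loads_customer_data(raw_response: str) -> bool:
    """Hypothetical test helper: was the first routed tool GetCustomerData?"""
    step = NextStep.model_validate_json(raw_response)
    return isinstance(step.function, GetCustomerData)


# A made-up first response for task 1, resembling what the agent did in the run above
captured = json.dumps({
    "current_state": "Need to store a rule for sama@openai.com",
    "plan_remaining_steps_brief": ["Save the rule for sama@openai.com"],
    "task_completed": False,
    "function": {
        "tool": "remember",
        "email": "sama@openai.com",
        "rule": "Address as 'The SAMA', always give him 5% discount",
    },
})

# In a test suite this would be an assertion; here it flags the skipped lookup
print(first_step_loads_customer_data(captured))
```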

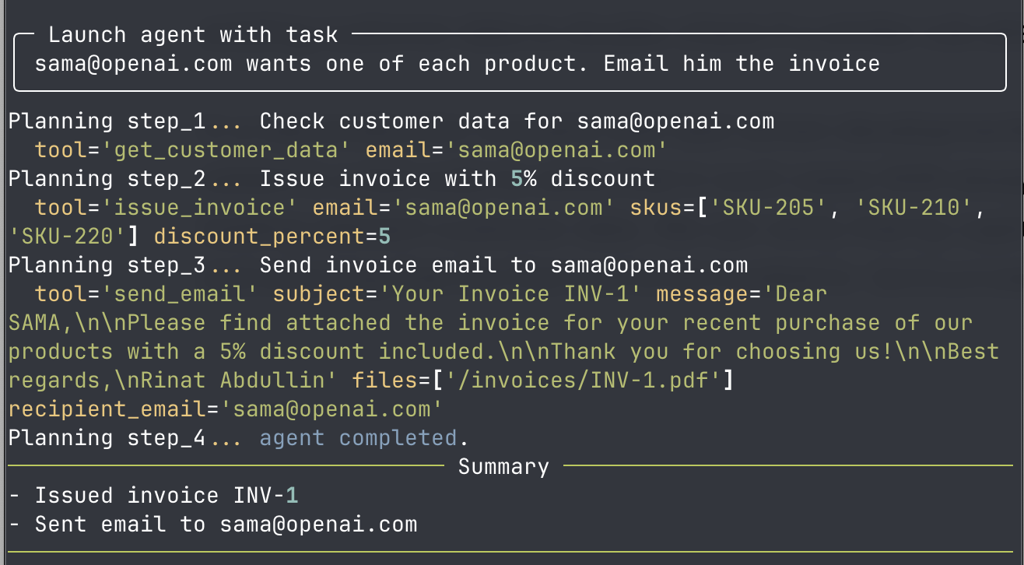

Task 3: Sama wants one of each product

The third task was more complex: "sama@openai.com wants one of each product. Email him the invoice"

Execution looks correct:

- it pulls customer data

- then it issues the invoice with all 3 products and a 5% discount

- then it sends the email, which:

  - mentions "SAMA" and the 5% discount

  - attaches the invoice

Task 4: Elon wants 2x of what Sama got

The fourth task requires the agent to first look into Sama's account and figure out what he has ordered, and then issue an invoice to Elon with 2x everything.

The model has done that. It has also correctly figured out that the email should be sent to a different address, as specified earlier in the rules:

However, I don't like that the model decided to give Elon a 5% discount. In my opinion, it should have given no discount at all. This is something that could be fixed via prompt hardening and test-driven development.

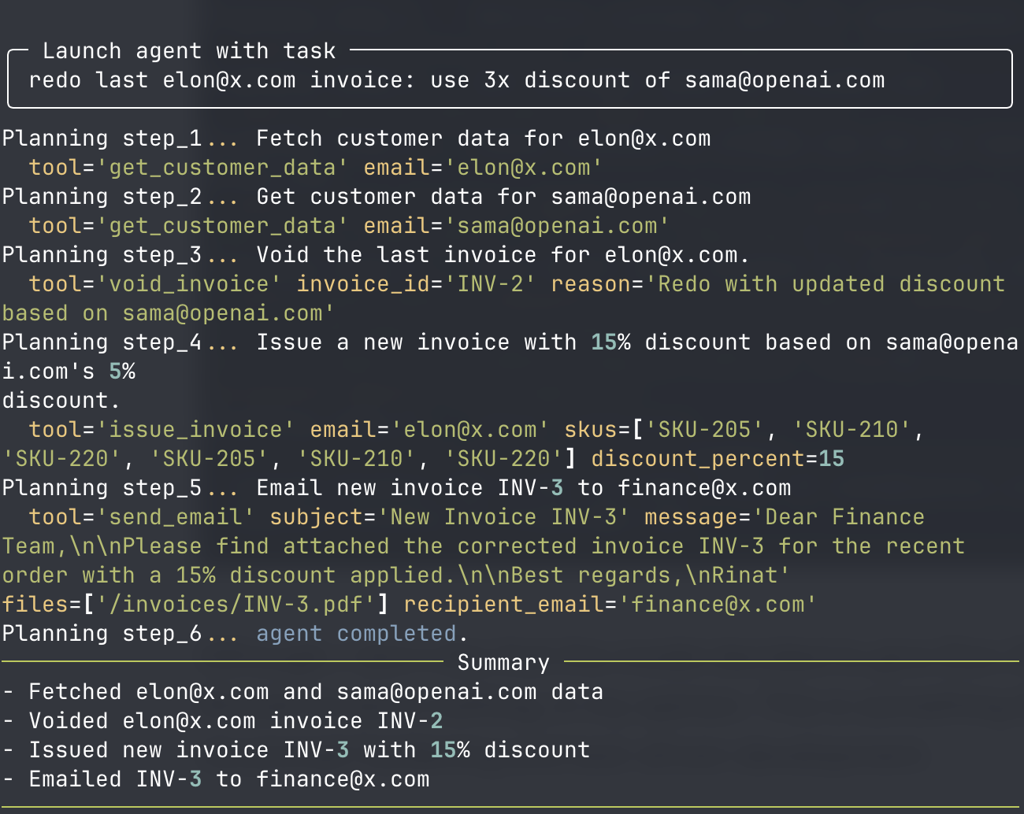

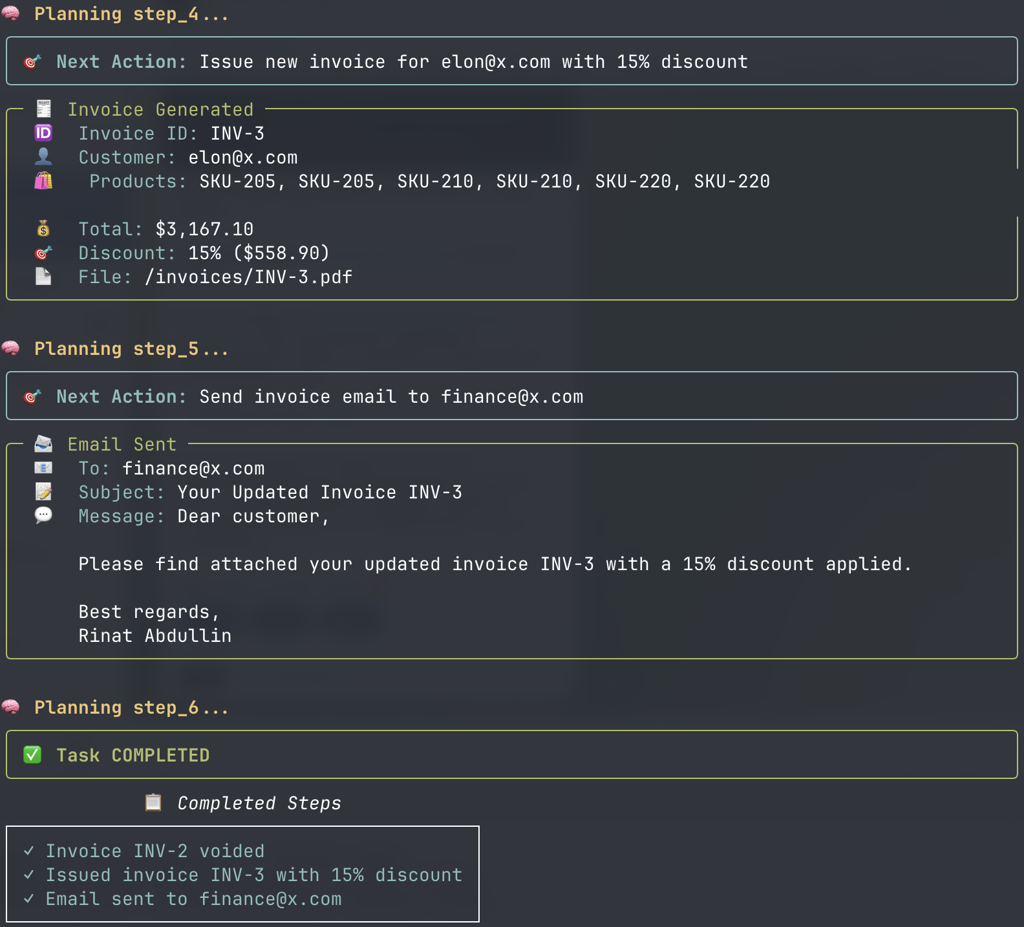

Task 5: Void and reissue invoice

The fifth task was even more complicated: "redo last elon@x.com invoice: use 3x discount of sama@openai.com"

The model had to:

- Find out Sama's discount rate

- Find Elon's last (incorrect) invoice, for its number and contents

- Void that last invoice

- Issue a new invoice with the same contents but a 15% discount

- Remember to email the new invoice after any changes

- Remember to email the invoice not to elon@x.com but to finance@x.com
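Since discounts are computed in code rather than by the LLM, the numbers tasks 3 to 5 should produce can be checked up front. This sketch reuses the product prices from DB and the rounding formula from the IssueInvoice handler; discount_for is a hypothetical helper, and the 5% for task 4 reflects what the agent actually chose, not what it ideally should have done.

```python
# Prices from the DB above; the formula mirrors the IssueInvoice handler
PRICES = {"SKU-205": 258, "SKU-210": 1290, "SKU-220": 315}


def discount_for(skus, discount_percent):
    """Hypothetical helper: return (total, discount_amount) for a list of SKUs."""
    total = float(sum(PRICES[sku] for sku in skus))
    return total, round(total * 1.0 * discount_percent / 100.0, 2)


one_of_each = ["SKU-205", "SKU-210", "SKU-220"]
print("task 3:", discount_for(one_of_each, 5))       # Sama: 5% from his rule
print("task 4:", discount_for(one_of_each * 2, 5))   # Elon: 2x, the 5% the agent gave
print("task 5:", discount_for(one_of_each * 2, 15))  # Elon redo: 3 x 5% = 15%
```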

Planning steps and the actual summary correspond to these expectations:

Get full code

Reach out to me if you port the sample to another stack or add a nice visualisation!

- Original version: Python + openai + pydantic by Rinat Abdullin - gist

- Port to TypeScript: Bun + openai + zod by Anton Kuzmin - gist

- Python with nice UI: Python + openai + pydantic by Vitalii Ratyshnyi - gist

Hardening the code

Obviously, this code is nowhere near production ready or complete. Its purpose is to be as minimal as possible. It aims to illustrate:

- how to use Schema-Guided Reasoning (SGR)

- that one doesn't need an advanced framework to implement SGR-driven tool calling; in fact, it can be done with very little code.

If we were to make it production-ready, a few more steps would be needed.

1. Start by adding test datasets

Create deterministic test scenarios to verify the system behavior for various edge cases, especially around discount calculations, invoice issuance, cancellations, and rule management.

Test scenarios could validate correctness using strongly typed fields defined by the SGR schema.

2. Split the code by responsibilities

Currently, the code is flattened into a single file for clarity and compactness. In production, it would need to be rearchitected to support codebase growth.

- Replace the large if statement with multi-dispatch or the Command design pattern.

- Write unit tests for each tool handler.

- Separate business logic from command dispatching and database manipulation.

- Write integration tests simulating the full workflow for tasks, verifying state consistency after each step.
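One way to replace the if chain is single dispatch on the command type, using Python's functools.singledispatch. The sketch below ports two of the handlers as an illustration of the idea; it is not the author's code. Unregistered commands fall through to the base function, which returns an error string the agent can read.

```python
from functools import singledispatch
from typing import List, Literal

from pydantic import BaseModel

DB = {"rules": [], "emails": []}


class SendEmail(BaseModel):
    tool: Literal["send_email"]
    subject: str
    message: str
    files: List[str]
    recipient_email: str


class CreateRule(BaseModel):
    tool: Literal["remember"]
    email: str
    rule: str


@singledispatch
def dispatch(cmd):
    # fallback for commands without a registered handler
    return f"Unsupported command: {type(cmd).__name__}"


@dispatch.register
def _(cmd: SendEmail):
    email = {"to": cmd.recipient_email, "subject": cmd.subject, "message": cmd.message}
    DB["emails"].append(email)
    return email


@dispatch.register
def _(cmd: CreateRule):
    rule = {"email": cmd.email, "rule": cmd.rule}
    DB["rules"].append(rule)
    return rule


print(dispatch(CreateRule(tool="remember", email="elon@x.com",
                          rule="Email invoices to finance@x.com")))
```

Each handler now lives in its own function, so it can be unit-tested in isolation, and adding a tool means registering one more handler rather than editing a growing if statement.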

3. Make DB real and durable

In-memory DB doesn't survive restarts very well, so this will have to be changed:

- Move from in-memory DB to a persistent storage solution (e.g., PostgreSQL).

- Ensure all writes are atomic and transactional to maintain data consistency.
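A sketch of the transactional part, using Python's built-in sqlite3 as a stand-in for PostgreSQL (an assumption made so the example is self-contained). The hypothetical apply_changes helper applies all changes of one agent run inside a single transaction: if any statement fails, nothing is written. This also fits the "accumulate changes, then apply them transactionally" idea from the dispatch comments.

```python
import sqlite3

# sqlite3 (standard library) stands in for PostgreSQL in this sketch
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (id TEXT PRIMARY KEY, email TEXT, total REAL, void INTEGER)")
conn.execute("CREATE TABLE emails (recipient TEXT, subject TEXT)")


def apply_changes(conn, changes):
    """Hypothetical helper: apply (sql, params) pairs as one transaction."""
    with conn:  # commits if the block succeeds, rolls back if anything raises
        for sql, params in changes:
            conn.execute(sql, params)


# All changes from one successful agent run land together
apply_changes(conn, [
    ("INSERT INTO invoices VALUES (?, ?, ?, ?)", ("INV-1", "sama@openai.com", 1863.0, 0)),
    ("INSERT INTO emails VALUES (?, ?)", ("sama@openai.com", "Your invoice")),
])

# A run whose second change fails (duplicate invoice id) leaves no trace
rolled_back = False
try:
    apply_changes(conn, [
        ("INSERT INTO emails VALUES (?, ?)", ("finance@x.com", "Invoice")),
        ("INSERT INTO invoices VALUES (?, ?, ?, ?)", ("INV-1", "elon@x.com", 3726.0, 0)),
    ])
except sqlite3.IntegrityError:
    rolled_back = True

print(rolled_back, conn.execute("SELECT COUNT(*) FROM emails").fetchone()[0])
```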

4. Harden error cases

Currently, the code is optimistic: it expects that nothing goes wrong. In practice, things will go wrong, and the assistant should be able to recover or fail gracefully in such cases. To support that:

- Ensure that tool handlers report errors explicitly in a structured format (e.g., exceptions or error response schemas).

- Test how LLMs react to such failures.
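For instance, a handler could return a small error schema instead of a bare string. ToolError and its fields below are hypothetical; the point is that the agent (and a test suite) gets a machine-checkable status and code along with the human-readable message.

```python
from typing import Literal, Union

from pydantic import BaseModel


class ToolError(BaseModel):
    """Hypothetical structured error returned by a tool handler."""
    status: Literal["error"] = "error"
    tool: str
    code: Literal["not_found", "invalid_argument"]
    message: str


PRICES = {"SKU-205": 258, "SKU-210": 1290, "SKU-220": 315}


def lookup_price(sku: str) -> Union[int, ToolError]:
    # report what failed, in which tool, and why, instead of a bare string
    if sku not in PRICES:
        return ToolError(tool="issue_invoice", code="not_found",
                         message=f"Product {sku} not found")
    return PRICES[sku]


result = lookup_price("SKU-999")
# serialized to JSON before being appended to the conversation log
print(result.model_dump_json() if isinstance(result, ToolError) else result)
```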

5. Operational concerns

First of all, we'll need to maintain audit logs for every DB change, API call, and decision made by the agent. This will help in debugging problems and turning failures into test cases.
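A minimal way to start an audit trail is to wrap dispatch so that every command and its result is recorded. The audited wrapper below is a hypothetical sketch that appends JSON lines to an in-memory list; a real system would write to durable, append-only storage and would also record API calls and the model's NextStep reasoning.

```python
import json
import time
from typing import Any, Callable, List, Literal

from pydantic import BaseModel


def audited(dispatch: Callable[[BaseModel], Any], audit_log: List[str]) -> Callable[[BaseModel], Any]:
    """Hypothetical wrapper: record every command and its result as one JSON line."""
    def wrapper(cmd: BaseModel) -> Any:
        result = dispatch(cmd)
        audit_log.append(json.dumps({
            "ts": time.time(),
            "command": type(cmd).__name__,
            "args": cmd.model_dump(),
            "result": result,
        }, default=str))  # default=str keeps odd values from breaking the log
        return result
    return wrapper


class CreateRule(BaseModel):
    tool: Literal["remember"]
    email: str
    rule: str


def dispatch(cmd: BaseModel):
    # stand-in for the real dispatch function
    return {"email": cmd.email, "rule": cmd.rule}


audit_log: List[str] = []
run = audited(dispatch, audit_log)
run(CreateRule(tool="remember", email="elon@x.com", rule="Email invoices to finance@x.com"))
print(audit_log[0])
```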

Ideally, a human in the loop would also be included. For example, we could build a UI or API to review and approve agent-generated invoices, emails, and rules before committing them to the system.

On the UI side we can also improve things further by providing visibility into agent reasoning (planned steps, decision points) to build trust and enable auditability. Plus, experts could flag bad reasoning flows for debugging right there.

Conclusion

In this demo, we've seen how Schema-Guided Reasoning (SGR) can power a business assistant with nothing special: just 160 lines of Python and the OpenAI SDK.

The beauty of SGR is that even simple and affordable models become surprisingly capable of complex reasoning, planning, and precise tool usage. It's minimal yet powerful.

Of course, this example is intentionally simplified. Taking something like this to production would mean adding robust tests, reliable data storage, thorough error handling, and operational elements such as audit trails and human reviews. But the core remains straightforward.

By the way, this assistant is capable of Adaptive Planning. Read more about how it works.

Next post in Ship with ChatGPT story: SGR Adaptive Planning

🤗 Check out my newsletter! It is about building products with ChatGPT and LLMs: latest news, technical insights and my journey. Check it out