ChatGPT quickstart for developers

This concise article will help you catch up on LLMs, ChatGPT and prompting, starting from zero. If you follow this page through, you’ll know how to craft a multi-shot prompt and what that actually means.

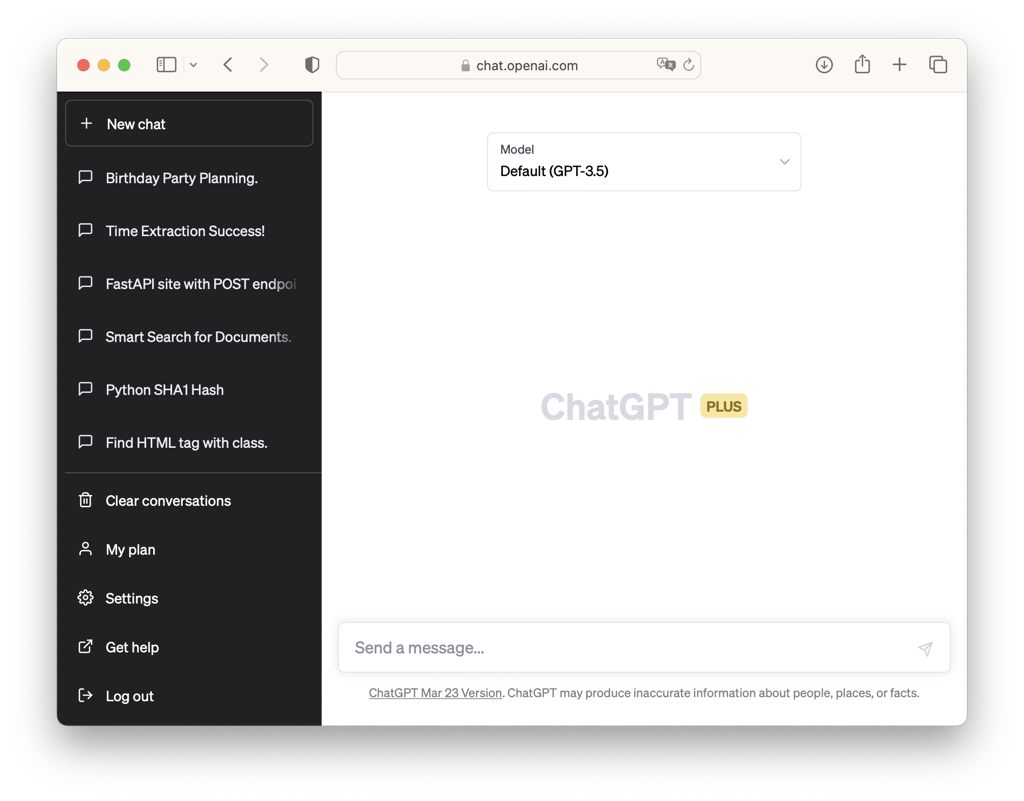

If you have an OpenAI ChatGPT account, it will help you follow along.

Let’s start with a few terms.

LLM stands for Large Language Model. Essentially, it is a big fat function that works with text.

GPT stands for Generative Pre-trained Transformer. Do you have autocomplete on your phone? GPT is the same idea, but far more complex: given some text, it generates more text. GPT is the type of LLM that we are interested in.
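To make the autocomplete analogy concrete, here is a toy sketch of the generation loop: predict the next word, append it, repeat. It is an assumption-laden illustration, not how GPT works inside: a simple bigram table (counting which word follows which) stands in for the transformer, and whole words stand in for tokens.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.split()
    table: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        table[current][nxt] += 1
    return table


def autocomplete(table: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        followers = table.get(words[-1])
        if not followers:
            break  # no known continuation: stop generating
        # Ties go to the follower seen first in the corpus.
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = "the bus leaves at noon and the bus arrives at one"
table = train_bigrams(corpus)
print(autocomplete(table, "the", max_words=3))  # prints "the bus leaves at"
```

A real GPT model predicts from the whole preceding text rather than just the last word, and it usually samples instead of always taking the top choice, but the outer loop has the same shape.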

ChatGPT is a famous GPT model trained by OpenAI. It is called Chat because it was additionally trained to follow human instructions and keep humans happy (this process is called RLHF, or Reinforcement Learning from Human Feedback).

OpenAI currently serves GPT versions 3.5 and 4. GPT-3.5 is cheap and good for many tasks. GPT-4 is more expensive, but smarter.

In ChatGPT (or similar apps) you interact with models by chatting. User requests are also known as “prompts”.

You can ask ChatGPT anything that you want. Go ahead and give it a try. The purpose here is to start building an intuition about how it responds to different queries. Here are a few prompt ideas to start the conversation:

- Help me plan a birthday party for a friend. Ask questions as needed.

- Explain LLMs to me like I’m 10 years old. Then ask follow-up questions to check my understanding.

- Write me a golang HTTP server that listens on port 8081 and replies with "Hello, friend" to all requests to '/hello'.

- I'm reading an FAQ about LLMs and ChatGPT on abdullin.com. Write a tweet about it. Be concise.

Prompts are like text functions written in English. If you can imagine a text operation and describe it precisely, a GPT model will do its best to execute it.
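Here is a minimal sketch of that idea in Python: a prompt template wrapped in an ordinary function. The `complete` parameter is a hypothetical stand-in for whatever sends text to the model (for example, a call to the OpenAI API). Passing it in keeps the function testable without network access, and the translation task is just an illustrative choice.

```python
from typing import Callable

# Hypothetical signature for "send this prompt to an LLM, get text back".
Complete = Callable[[str], str]

PROMPT_TEMPLATE = (
    "Translate the following text to French. "
    "Reply with the translation only.\n\n"
    "Text: {text}\n"
    "Translation:"
)


def translate_to_french(text: str, complete: Complete) -> str:
    """A 'text function': fill the template, ask the model, clean up the answer."""
    prompt = PROMPT_TEMPLATE.format(text=text)
    return complete(prompt).strip()


def fake_complete(prompt: str) -> str:
    """A fake model for local experiments; a real one would call an LLM API."""
    return " Bonjour, mon ami " if "Hello, friend" in prompt else " ? "


print(translate_to_french("Hello, friend", fake_complete))  # prints "Bonjour, mon ami"
```

From the caller's point of view this is just a function from string to string; the English instructions inside the template are its "implementation".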

The process of creating prompts that get the job done is called prompt engineering.

Since prompts are like text functions, and software engineers know how to write and test functions, we already know the fundamentals of writing good prompts:

- Learn patterns and practice them

- Test and benchmark prompts
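Testing a prompt can look much like testing any other function: keep a set of inputs with expected outputs, run the prompt over them and measure how often it gets the answer right. A minimal sketch, assuming a hypothetical `complete` callable for the model and a hypothetical `extract_time` prompt function under test; the fake model below always answers "tomorrow", so one of the two cases fails.

```python
from typing import Callable

Complete = Callable[[str], str]  # hypothetical: prompt in, model text out


def extract_time(user_input: str, complete: Complete) -> str:
    """A hypothetical prompt function under test."""
    prompt = (
        "Extract the time of the event. Answer concisely.\n\n"
        f"Text: {user_input}\nTime:"
    )
    return complete(prompt)


def benchmark(
    prompt_fn: Callable[[str, Complete], str],
    cases: list[tuple[str, str]],
    complete: Complete,
) -> float:
    """Run every test case and return the fraction answered correctly."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    for user_input, expected in cases:
        actual = prompt_fn(user_input, complete)
        # Exact match after normalising case and surrounding whitespace.
        if actual.strip().lower() == expected.strip().lower():
            passed += 1
        else:
            print(f"FAIL: {user_input!r} -> {actual!r}, expected {expected!r}")
    return passed / len(cases)


def always_tomorrow(prompt: str) -> str:
    """A fake model that always answers 'tomorrow', standing in for a real LLM."""
    return "tomorrow"


cases = [
    ("it is going to rain tomorrow", "tomorrow"),
    ("What do you think about afternoon lunch?", "afternoon"),
]
print(benchmark(extract_time, cases, always_tomorrow))  # prints one FAIL line, then 0.5
```

Exact matching is the simplest scoring rule; real model answers vary in wording, so you may need looser comparisons. Either way, rerunning the same cases after every prompt change tells you whether the change actually helped.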

That said, please go ahead and read these two resources now; they will save you a lot of time:

- Prompt Engineering vs. Blind Prompting. This article by Mitchell Hashimoto (of HashiCorp and Terraform) puts prompting into the perspective of software engineering.

- Prompting Guide: it will familiarise you with prompting techniques. Read only the “Introduction”, “Zero-shot Prompting” and “Few-shot Prompting” sections; the rest is not relevant for us yet.

After reading them, you should be able to understand exactly what is going on in this prompt:

You are a smart agent that extracts time of an event from text. Answer concisely:

Q: it is going to rain tomorrow

A: tomorrow

Q: What do you think about afternoon lunch?

A: afternoon

Q: Bus leaves at 12:45 tomorrow from platform 12.

A: 12:45 tomorrow

Q: <USER_INPUT>

A:

To summarise, this prompt:

- is designed to extract the time fragment from user input;

- should work in most major human languages;

- “trains” the model by providing three examples (this is known as few-shot or multi-shot prompting).

The last example has a `<USER_INPUT>` placeholder. When processing user input, we replace the placeholder with that input and pass the whole prompt to ChatGPT to complete. The returned answer is the output of our function.
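A minimal Python sketch of that "function": the few-shot prompt is kept as a template, the `<USER_INPUT>` placeholder is replaced with the user's text, and the result goes to a model. `complete` is a hypothetical callable, and collapsing the input onto one line is an assumption of this sketch, so that multi-line input cannot break the Q/A pattern.

```python
from typing import Callable

FEW_SHOT_PROMPT = """You are a smart agent that extracts time of an event from text. Answer concisely:

Q: it is going to rain tomorrow
A: tomorrow

Q: What do you think about afternoon lunch?
A: afternoon

Q: Bus leaves at 12:45 tomorrow from platform 12.
A: 12:45 tomorrow

Q: <USER_INPUT>
A:"""


def build_prompt(user_input: str) -> str:
    """Substitute the user's text into the last example's placeholder."""
    # Collapse whitespace and newlines so the input stays on the "Q:" line.
    single_line = " ".join(user_input.split())
    return FEW_SHOT_PROMPT.replace("<USER_INPUT>", single_line)


def extract_event_time(user_input: str, complete: Callable[[str], str]) -> str:
    """The model's completion of the prompt is this function's return value."""
    return complete(build_prompt(user_input)).strip()


prompt = build_prompt("Meeting moved to\nFriday 3pm")
print(prompt.splitlines()[-2])  # prints "Q: Meeting moved to Friday 3pm"
```

With the official `openai` Python package (v1 or later), `complete` could wrap `client.chat.completions.create(model="gpt-3.5-turbo", messages=[{"role": "user", "content": prompt}])` and return `choices[0].message.content`. Treat that wiring as an illustration and check the current SDK documentation before relying on it.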

GPT prompts are the building blocks for adding ML-driven features to products. Here are a few examples of what is possible:

- A smart chatbot that can answer questions about a company or a product.

- Automation that extracts structured information from emails, documents or voice transcripts.

- Software that creates product listings from human descriptions and images.

- Automation that writes and deploys a software application based on a human description of it.

How do we build something like that? This usually requires chaining multiple prompts together with some business logic.
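As a sketch of what "chaining" can mean, assuming two hypothetical prompt functions: one prompt classifies an email, plain Python decides what happens next, and a second prompt runs only when it is needed. `complete` is again an injected, hypothetical model call, so the chain can be exercised with a fake model.

```python
from typing import Callable

Complete = Callable[[str], str]  # hypothetical: prompt in, model text out


def classify_email(email: str, complete: Complete) -> str:
    """Prompt 1: a hypothetical classifier that answers MEETING or OTHER."""
    prompt = (
        "Classify the email as MEETING or OTHER. Answer with one word.\n\n"
        f"Email: {email}\nCategory:"
    )
    return complete(prompt).strip().upper()


def extract_meeting_time(email: str, complete: Complete) -> str:
    """Prompt 2: a hypothetical extractor for the meeting time."""
    prompt = (
        "Extract the time of the meeting. Answer concisely.\n\n"
        f"Email: {email}\nTime:"
    )
    return complete(prompt).strip()


def process_email(email: str, complete: Complete) -> dict[str, str]:
    """Prompt 1, then business logic, then (maybe) prompt 2."""
    category = classify_email(email, complete)
    if category != "MEETING":
        return {"category": category}  # no need for a second model call
    return {"category": category, "time": extract_meeting_time(email, complete)}


def fake_complete(prompt: str) -> str:
    """Stand-in model: every email is a meeting at 3pm Friday."""
    return "meeting" if "Category:" in prompt else "3pm Friday"


print(process_email("Can we sync at 3pm Friday?", fake_complete))
# prints {'category': 'MEETING', 'time': '3pm Friday'}
```

Each prompt stays small and testable on its own, while ordinary code handles routing and validation between the calls.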

The fastest path there is LangChain, an open-source Python library that contains a lot of pre-built functionality.

Published: April 20, 2023.

Next post in the Ship with ChatGPT story: ChatGPT is unpredictable in text analysis and extraction. Can this be fixed?
