Schema-Guided Reasoning (SGR)

Schema-Guided Reasoning (SGR) is a technique that guides large language models (LLMs) to produce structured, clear, and predictable outputs by enforcing reasoning through predefined steps. By creating a specific schema (or structured template), you explicitly define:

- What steps the model must go through (preventing skipped or missed reasoning)

- In which order it must reason (ensuring logical flow)

- Where it should explicitly focus attention (improving depth and accuracy)

Instead of allowing free-form text completion (which can be inconsistent or ambiguous), the schema acts as a strict guideline. This guideline is enforced on the LLM via constrained decoding (Structured Output). You can think of it as giving the model a clear “checklist” or “structured script” to follow.
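The sketch below encodes the three points above as plain JSON Schema for a hypothetical word-problem task. `required` lists the steps the model may not skip. Property order gives the reasoning order, assuming the engine emits keys in schema order (OpenAI's Structured Outputs does this). Per-field descriptions tell the model where to focus. All names (`SIMPLE_MATH_SCHEMA`, `follows_checklist`, the field names) are made up for this example.

```python
import json

# Hypothetical schema for a word-problem task.
# Property order = reasoning order; "required" = no step may be skipped;
# descriptions tell the model where to focus at each step.
SIMPLE_MATH_SCHEMA = {
    "type": "object",
    "properties": {
        "problem_restated": {
            "type": "string",
            "description": "Restate the problem in your own words.",
        },
        "known_values": {
            "type": "array",
            "items": {"type": "string"},
            "description": "Every quantity given in the problem, with units.",
        },
        "calculation_steps": {
            "type": "array",
            "items": {"type": "string"},
            "description": "One arithmetic step per entry.",
        },
        "final_answer": {
            "type": "number",
            "description": "The result, produced only after the steps above.",
        },
    },
    "required": ["problem_restated", "known_values", "calculation_steps", "final_answer"],
    "additionalProperties": False,
}


def follows_checklist(raw_output: str, schema: dict) -> bool:
    """True if the output contains exactly the schema's steps, in schema order."""
    data = json.loads(raw_output)  # json.loads preserves key order in the dict
    return list(data) == list(schema["properties"])


sample = json.dumps({
    "problem_restated": "Three boxes hold 4 apples each; how many apples in total?",
    "known_values": ["3 boxes", "4 apples per box"],
    "calculation_steps": ["3 * 4 = 12"],
    "final_answer": 12,
})
print(follows_checklist(sample, SIMPLE_MATH_SCHEMA))  # True
```

With constrained decoding the model cannot violate this structure. A check like `follows_checklist` is still handy in tests, or with engines that only support looser JSON modes.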

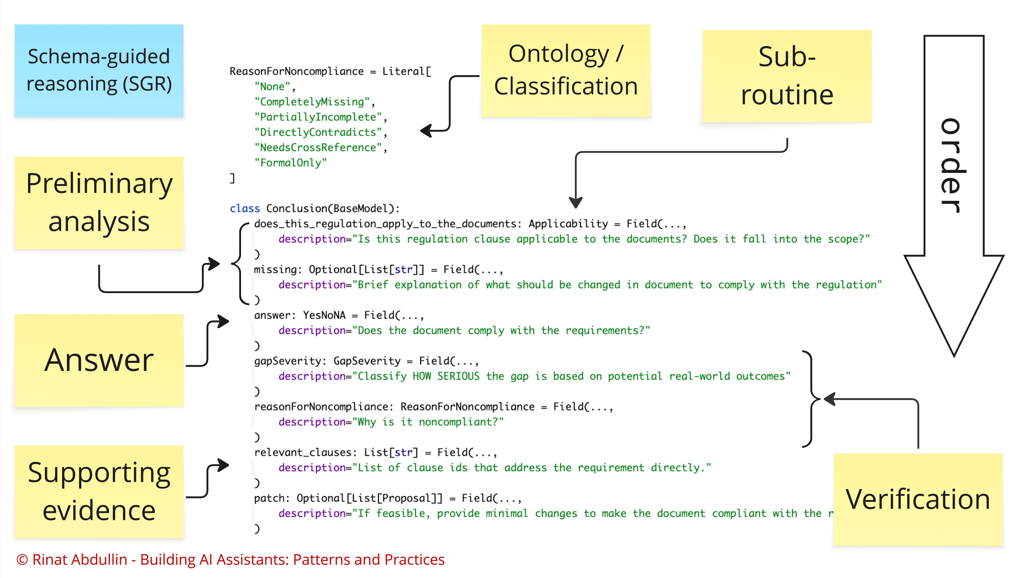

Here is one example of SGR in action from a project in the compliance/FinTech domain. This is a Pydantic data structure that forces the LLM to analyze a clause from an internal company procedure in a very specific order.

We translated a domain expert’s mental checklist into a structured reasoning schema for the LLM.
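Below is a hypothetical schema in the same spirit. It uses standard-library dataclasses so it runs without extra dependencies; in practice Pydantic would handle parsing and validation. Every class name, field name, and sample value is an illustrative assumption, not the project's actual model.

```python
import json
from dataclasses import dataclass
from typing import Literal, get_args

RiskLevel = Literal["low", "medium", "high"]


@dataclass
class ClauseAnalysis:
    """Hypothetical compliance checklist; the model fills fields top to bottom."""
    clause_summary: str               # 1. what the clause actually says
    relevant_requirements: list[str]  # 2. external rules the clause touches
    obligations_found: list[str]      # 3. concrete obligations stated in the clause
    gaps: list[str]                   # 4. requirements the clause fails to cover
    risk_level: RiskLevel             # 5. verdict, only after the evidence above
    recommendation: str               # 6. suggested remediation


def parse_clause_analysis(raw_output: str) -> ClauseAnalysis:
    """Parse model output, rejecting anything that breaks the checklist."""
    data = json.loads(raw_output)
    try:
        analysis = ClauseAnalysis(**data)  # missing or extra keys raise TypeError
    except TypeError as exc:
        raise ValueError(f"output does not match the checklist: {exc}") from None
    if analysis.risk_level not in get_args(RiskLevel):
        raise ValueError(f"unexpected risk_level: {analysis.risk_level!r}")
    return analysis


# Made-up model output, for illustration only.
example = json.dumps({
    "clause_summary": "Customer data is kept for seven years.",
    "relevant_requirements": ["data retention policy"],
    "obligations_found": ["retain customer records"],
    "gaps": ["no deletion procedure after the retention period"],
    "risk_level": "medium",
    "recommendation": "Add a documented deletion step.",
})
print(parse_clause_analysis(example).risk_level)  # medium
```

Putting `risk_level` after the evidence fields means the verdict is generated only once the supporting analysis is already in the output.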

See also SGR Patterns such as Cascade, Routing, and Cycle.

By enforcing strict schema structures, we ensure predictable and auditable reasoning, gain fine-grained control over inference quality, and easily validate intermediate results against test data.

In other words, the schema controls the layout of the response. This lets us break tasks into smaller steps while enforcing mandatory checkpoints.

Here are some benefits:

- Reproducible reasoning - inference is more consistent across repeated runs.

- Auditable - SGR makes every reasoning step explicit and inspectable.

- Debuggable & Testable - intermediate outputs can be evaluated and improved directly (they can be linked to test datasets with evals).

- We can translate expert knowledge into executable prompts. Domain-Driven Design (DDD) works really well here.

- SGR enhances both reasoning transparency and output reliability. Accuracy boosts of 5-10% are not uncommon.

- SGR improves the reasoning capabilities of weaker local models, making them usable in more workloads.
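Each reasoning step is a separate field, so evaluation can target individual steps instead of only the final answer. The sketch below is in plain Python. It assumes model outputs are already parsed into dicts and that a hypothetical labelled test set lists expected values for whichever fields were annotated. The field names are made up.

```python
def per_field_accuracy(outputs: list[dict], expected: list[dict]) -> dict[str, float]:
    """Score each labelled field independently across a test set.

    Only the fields present in a test case's expected dict are scored, so an
    intermediate step can be evaluated even where the final answer is unlabelled.
    """
    if len(outputs) != len(expected):
        raise ValueError("outputs and expected must be the same length")
    hits: dict[str, int] = {}
    totals: dict[str, int] = {}
    for out, exp in zip(outputs, expected):
        for field, want in exp.items():
            totals[field] = totals.get(field, 0) + 1
            if out.get(field) == want:
                hits[field] = hits.get(field, 0) + 1
    return {field: hits.get(field, 0) / totals[field] for field in totals}


outputs = [
    {"document_type": "invoice", "language": "de", "total": 120.0},
    {"document_type": "invoice", "language": "fr", "total": 99.0},
]
expected = [
    {"document_type": "invoice", "language": "de"},
    {"document_type": "purchase_order", "language": "fr"},
]
print(per_field_accuracy(outputs, expected))  # {'document_type': 0.5, 'language': 1.0}
```

A low score on one field points at the specific step to fix, whether that is the prompt, the field description, or the field order.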

Note that we are not replacing the entire prompt with structured output. We just don't rely on the prompt alone to force the LLM to follow a certain reasoning process precisely.
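In practice, the prompt still carries the task description and domain guidance, while the schema carries the mandatory steps. Below is a request body that sends both. It assumes an OpenAI-compatible Chat Completions endpoint with Structured Outputs (`response_format` of type `json_schema`). The model name, prompt text, and `TRIAGE_SCHEMA` are placeholders.

```python
import json

# Hypothetical schema: the reasoning steps live here, not in the prompt.
TRIAGE_SCHEMA = {
    "type": "object",
    "properties": {
        "key_facts": {"type": "array", "items": {"type": "string"}},
        "category": {"type": "string", "enum": ["billing", "technical", "other"]},
        "reply_draft": {"type": "string"},
    },
    "required": ["key_facts", "category", "reply_draft"],
    "additionalProperties": False,
}


def build_chat_request(model: str, system_prompt: str, user_input: str,
                       schema: dict, schema_name: str) -> dict:
    """Combine a normal prompt with a strict JSON Schema response format."""
    return {
        "model": model,
        "messages": [
            # The prompt still explains the task and the domain...
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_input},
        ],
        # ...while constrained decoding enforces the structure.
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": schema_name, "strict": True, "schema": schema},
        },
    }


request_body = build_chat_request(
    model="your-model-name",
    system_prompt="You triage customer emails for a SaaS support team.",
    user_input="I was charged twice this month.",
    schema=TRIAGE_SCHEMA,
    schema_name="email_triage",
)
# Serialized body, ready to POST to an OpenAI-compatible /v1/chat/completions endpoint.
payload = json.dumps(request_body)
```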

Deep Dive

To dive deeper:

- Read through the SGR Patterns: Cascade, Routing, and Cycle.

- Go through a few SGR Examples that illustrate the application of SGR:

  - simple math task

  - text-to-sql

  - document classification

  - advanced reasoning in compliance

- Business Assistant demonstrates how to build a reasoning business assistant with tool use in 160 lines of Python.

- Adaptive Planning further explains how and why this simple agent demo is capable of adapting its plans to new circumstances on-the-fly.

Production Uses

Schema-Guided Reasoning (SGR) is the single most widely applied LLM pattern in the AI cases that I've observed. It was used:

- in manufacturing and construction - to extract and normalise information from purchase orders, data sheets and invoices in multiple languages (when used together with a Visual LLM);

- in business automation products - to automatically create tickets, issues and calendar entries from the calendar input;

- in EU logistics - to normalise and extract information from diverse tax declaration forms;

- in fintech - to accurately parse regulations for further ingestion into compliance assistants, and then to run compliance gap analysis according to the defined checklist process;

- in sales - to power lead generation systems that run web research driven by custom workflows.

Schema-Guided Reasoning (SGR) becomes even more important for the locally-capable models (models that could run on private servers offline). Such models have much less cognitive capacity than what we could get by querying OpenAI or Anthropic APIs. In other words, local models are generally not as smart as the cloud ones. SGR helps to work around this limitation.

Support

Schema-Guided Reasoning (SGR) works with modern cloud providers that support Structured Output via constrained decoding. It doesn't require reasoning models, but it works well with models that were distilled from reasoning models.

- OpenAI - supported via Structured Outputs (including OpenAI on Azure). GPT-5 uses JSON Schema via llguidance.

- Mistral - supported via Custom Structured Output.

- Google/Gemini - Structured Outputs properly supported since November 2, 2025, via JSON Schema (Pydantic and Zod are supported).

- Grok - supported for multiple models via Structured Outputs.

- Fireworks AI - supported via JSON Schema.

- Cerebras - supported via Structured Outputs.

- OpenRouter - depends on the downstream provider; maps to JSON Schema.

Most modern inference engines support the necessary capability:

- ollama - via Structured Outputs

- vllm - via xgrammar or guidance backends

- TensorRT-LLM - e.g. via GuidedDecoding

- SGLang - via Outlines, XGrammar or llguidance
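For a local engine, Ollama's `/api/chat` endpoint accepts a JSON Schema in its `format` field. The sketch below builds such a request with only the standard library. It assumes a server at Ollama's default address `http://localhost:11434`, and the model name and `ANSWER_SCHEMA` are placeholders. `send_and_parse` needs a running server, so it is defined but not called here.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # default local Ollama address


def build_ollama_request(model: str, prompt: str, schema: dict,
                         url: str = OLLAMA_CHAT_URL) -> urllib.request.Request:
    """Build (but do not send) a chat request constrained by a JSON Schema."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "format": schema,               # JSON Schema drives constrained decoding
        "stream": False,                # one complete reply instead of chunks
        "options": {"temperature": 0},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_and_parse(request: urllib.request.Request) -> dict:
    """Send the request and decode the schema-shaped answer (needs a live server)."""
    with urllib.request.urlopen(request) as response:
        reply = json.loads(response.read())
    # The structured answer arrives as a JSON string in message.content.
    return json.loads(reply["message"]["content"])


# Hypothetical schema: reasoning steps first, answer last.
ANSWER_SCHEMA = {
    "type": "object",
    "properties": {
        "reasoning_steps": {"type": "array", "items": {"type": "string"}},
        "answer": {"type": "string"},
    },
    "required": ["reasoning_steps", "answer"],
}
request = build_ollama_request("your-local-model", "Is 91 a prime number?", ANSWER_SCHEMA)
```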

Citation

```bibtex
@misc{abdullin2025sgr,
  author = {Abdullin, Rinat},
  title  = {Schema-Guided Reasoning ({SGR})},
  year   = {2025},
  month  = jul,
  url    = {https://abdullin.com/schema-guided-reasoning/},
}
```

References

- Video with more background on text-to-sql: NODES 2024 - LLM Query Benchmarks: Cypher vs SQL

- Talk by Andrej Karpathy from Microsoft Build 2023: State of GPT

