Robotic R&D: FPGA and ML Pipelines

We've made progress in replicating a high-precision robotic arm (from Haddington Dynamics) since last April.

Before moving forward, let me recap the functional components of our robotic joint (with feedback and high precision):

- processor sends controlling impulses to a motor driver;

- motor driver modulates high-voltage electrical current to rotate a stepper motor;

- motor spins a speed reducer gear;

- an optical encoder (a wheel with slits that pass through a pair of IR light beams) translates the angular position of the motor into a pair of voltage-modulated signals;

- optical interface board captures the voltage levels, filters out some noise and feeds the result to the analog-to-digital converter, from which the processor can read.
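To make the last two steps more concrete, here is a minimal sketch of how a pair of encoder signals could be turned into an angle. It rests on assumptions that are not stated above: that the two signals are roughly sinusoidal, 90 degrees out of phase and already scaled to the 0..1 range. The slit count of 100 and the function names are made up for illustration; this is not the actual firmware.

```python
import math

def phase_in_slit(a, b, a_mid=0.5, b_mid=0.5):
    """Phase (radians, -pi..pi) within one slit period from two readings.

    Assumes a and b behave like cosine and sine around their midpoints.
    """
    return math.atan2(b - b_mid, a - a_mid)

def track_angle(samples, slits=100):
    """Accumulate unwrapped phase over (a, b) samples.

    Returns the shaft rotation in degrees relative to the first sample.
    `slits` is a hypothetical number of slits on the encoder wheel.
    """
    total = 0.0
    prev = None
    for a, b in samples:
        phase = phase_in_slit(a, b)
        if prev is not None:
            delta = phase - prev
            # Unwrap: take the shortest way around the circle, which is
            # valid while the shaft moves less than half a slit per sample.
            if delta > math.pi:
                delta -= 2 * math.pi
            elif delta < -math.pi:
                delta += 2 * math.pi
            total += delta
        prev = phase
    # One full electrical turn (2*pi) corresponds to one slit period.
    return math.degrees(total) / slits
```

The sketch only works while the shaft moves less than half a slit between samples, which is one reason a higher sampling frequency matters.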

In April we had the following goals in mind:

- switch from 3D-printed cycloidal speed reducer to a 3D-printed planetary gear;

- design and print an optical encoder to match the new gear;

- print and solder an optical interface board, replacing a tangling mess of wires on the breadboard;

- switch processing from Arduino Uno to a MiniZed Dev board (ARM+FPGA);

- switch from L293D-based stepper motor driver to A4988.

All these goals are complete now. In addition to that, there is an emerging environment and tooling for moving forward with machine learning in this project.

To clarify a frequently asked question: this isn't a work project, just something we are doing in our free time.

This is an exercise in running R&D projects in a new space with a small team, a limited budget and even more limited time. All while learning new skills and trying to build something interesting, with smart software that compensates for the hardware shortcomings.

Optical Interface

Initially, our optical interface was just a mess of wires, capacitors and resistors on a breadboard.

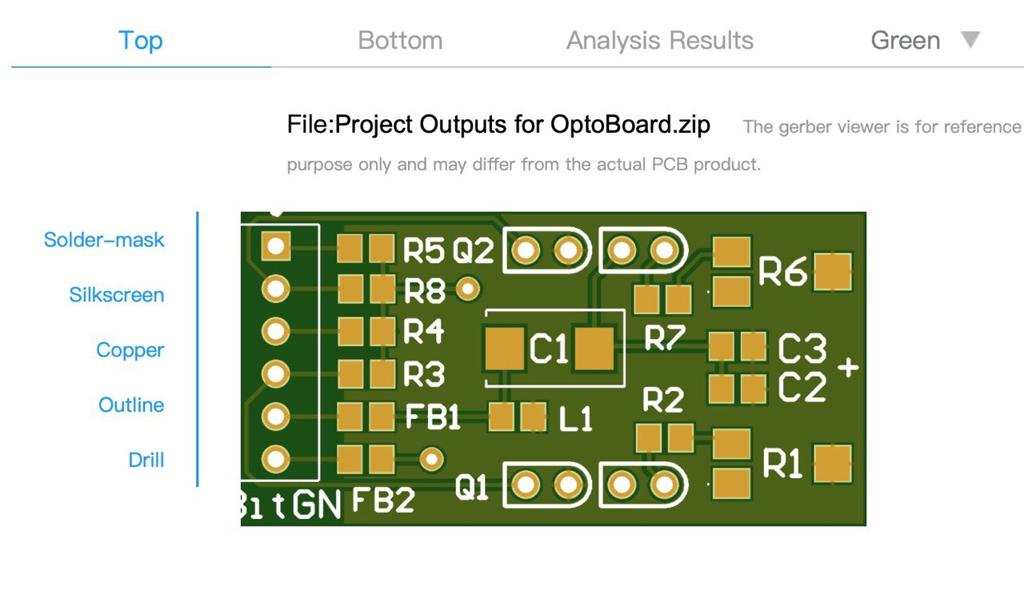

Based on the original schematics from Haddington Dynamics, I eventually managed to finish the routing and get a PCB design that looked correct.

JLCPCB has a good reputation for printing out circuit boards, so that's where we placed the order: 5 boards and one SMT stencil (helps to apply solder paste).

We could've just reused the Gerber files from Haddington Dynamics, but then we would have missed out on a lot of hands-on experience, which is critical for building the intuition this project needs.

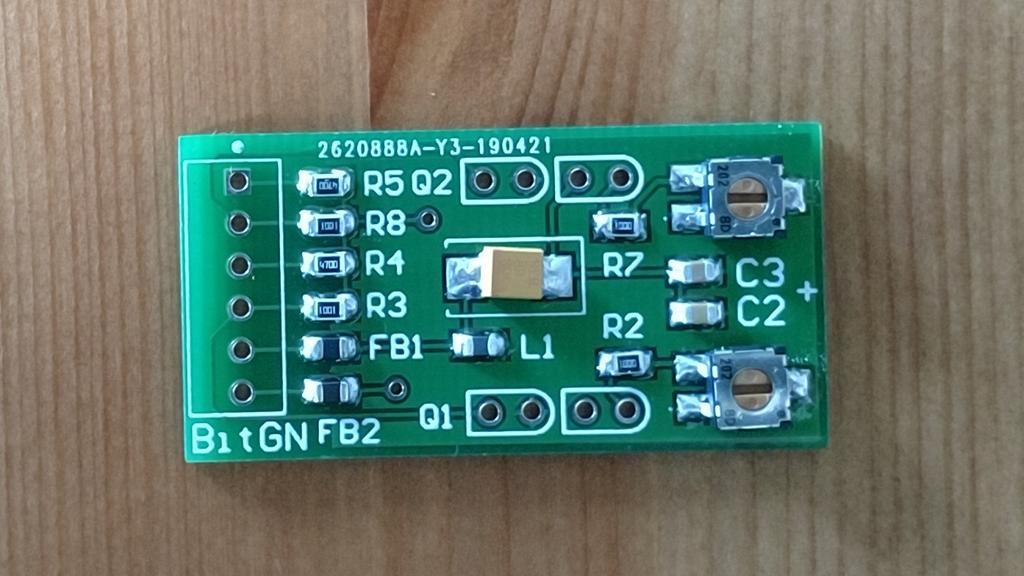

Within the next couple of months we received the necessary parts and "good enough" equipment, then destroyed a practice board while learning SMT soldering with a heat gun.

As it turns out, soldering, per se, is easy. The tricky part is in placing solder paste and sticking little chips without accidentally scattering everything across the table and the floor.

Planetary Speed Reducer

Aigiz printed a planetary speed reducer (from an existing design) that used airsoft balls as bearings.

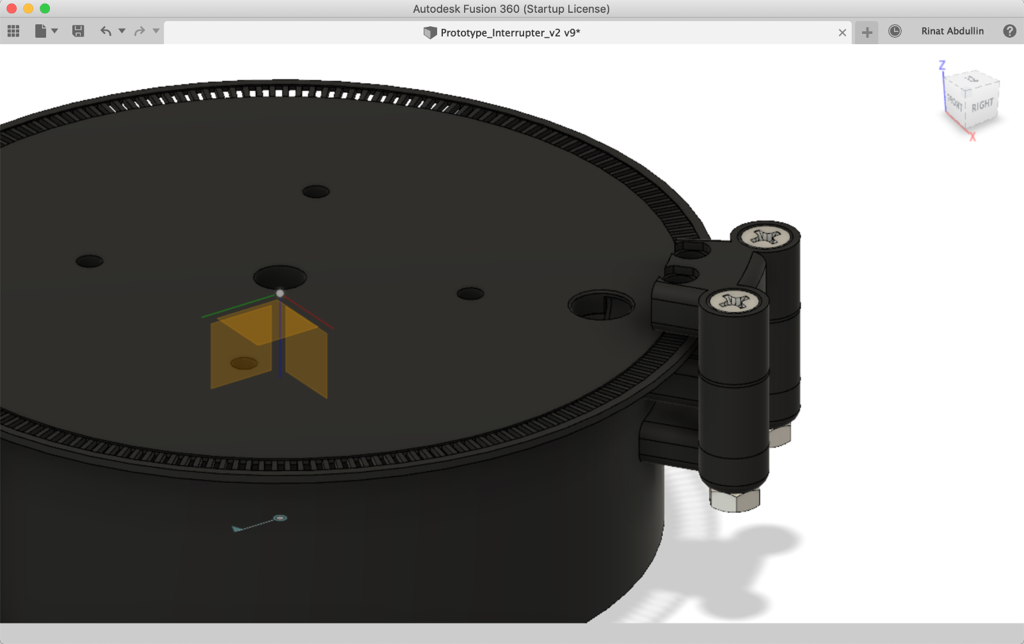

We designed an optical encoder in Fusion 360 to fit this speed reducer. It was modular to allow rapid iterations without reprinting everything all the time.

The modular design paid off quickly. As it turns out, component manufacturers don't always stick to precise dimensions, so a lot of fiddling and gluing was necessary.

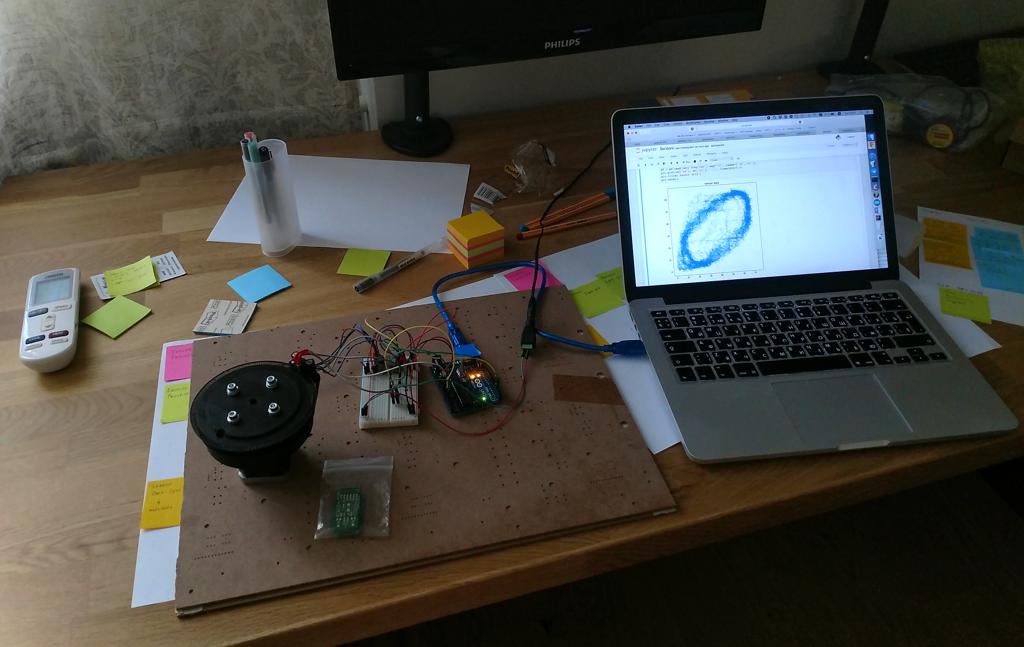

After connecting everything together, we got an optical encoder with good repeatability but low precision. An Arduino Uno controlled it at this point.

That's when the data started flowing in.

ML Pipelines

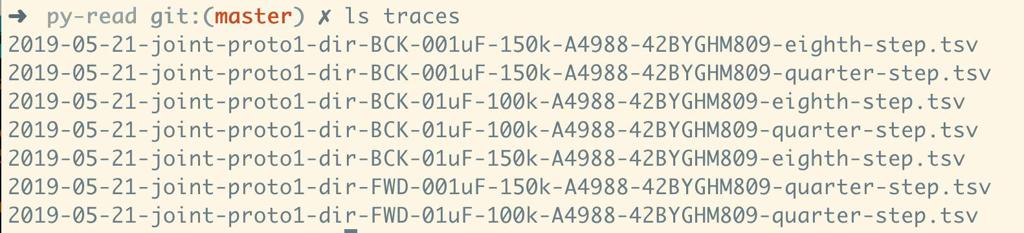

Getting the design to work involved fiddling with different elements: capacitors, resistors and micro-stepping. Somewhere along the way a collection of data samples started getting out of control.

The project is running in small iterations of 30-45 minutes. There isn't much time for "getting into the flow" and any cognitive overhead kills productivity.

At this point, it made sense to pause and invest effort in setting up the necessary software tooling. As in most software projects, having the right tools can reduce toil and enable a steady iteration rhythm.

By coincidence, two peculiar things happened at this time, helping to shape this tooling:

- Zhamak Dehghani published an article on How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh. Among other things, she explored the notion of domain datasets and collaboration in data-driven organizations.

- I stumbled upon an incredibly rich lightning talk by Stephen Pimentel on machine learning pipelines at Apple.

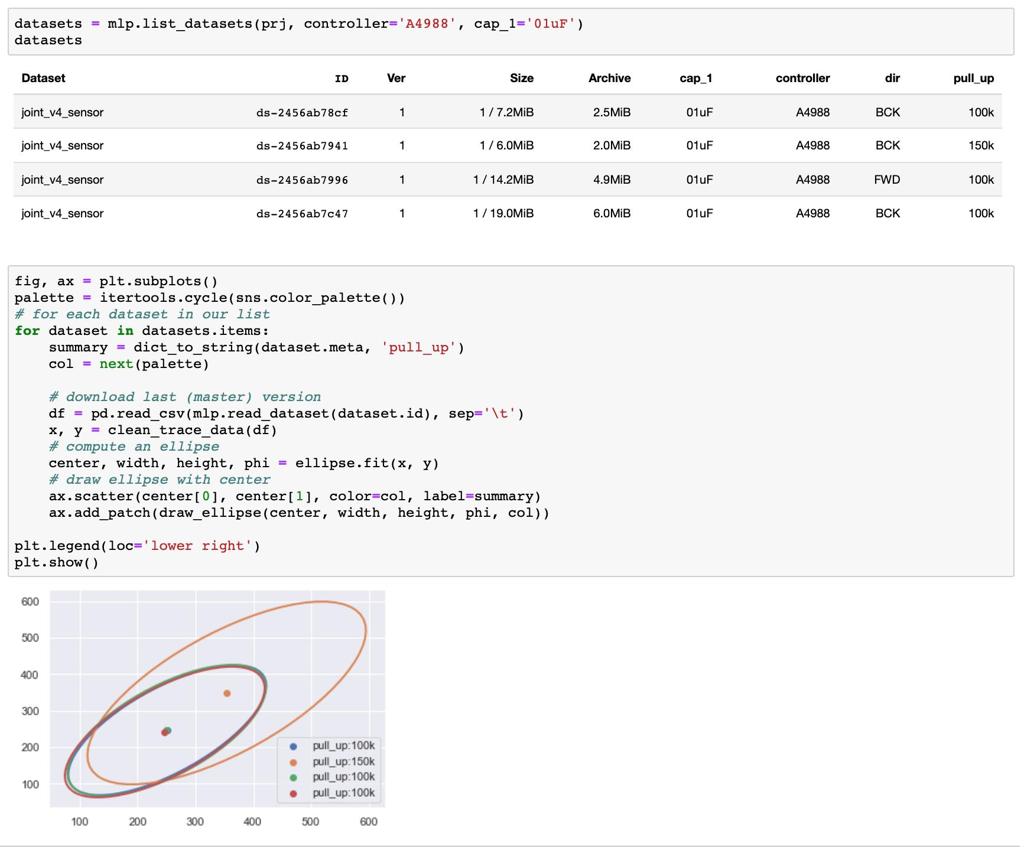

So, after throwing a bunch of Post-It notes on the wall, I hacked together a minimally usable environment for organizing datasets in Jupyter.

As it turns out, Event Storming works nicely with User Story Mapping. Event Storming helps to capture concepts, transitions and abstract details within the business logic. User Story Mapping helps to organize and prioritize potential features, while aligning them with the bigger picture.

The biggest benefit was in enabling rapid and focused iterations (as in 2-4 hours per iteration) while deferring everything "not-so-important" to a later stage.

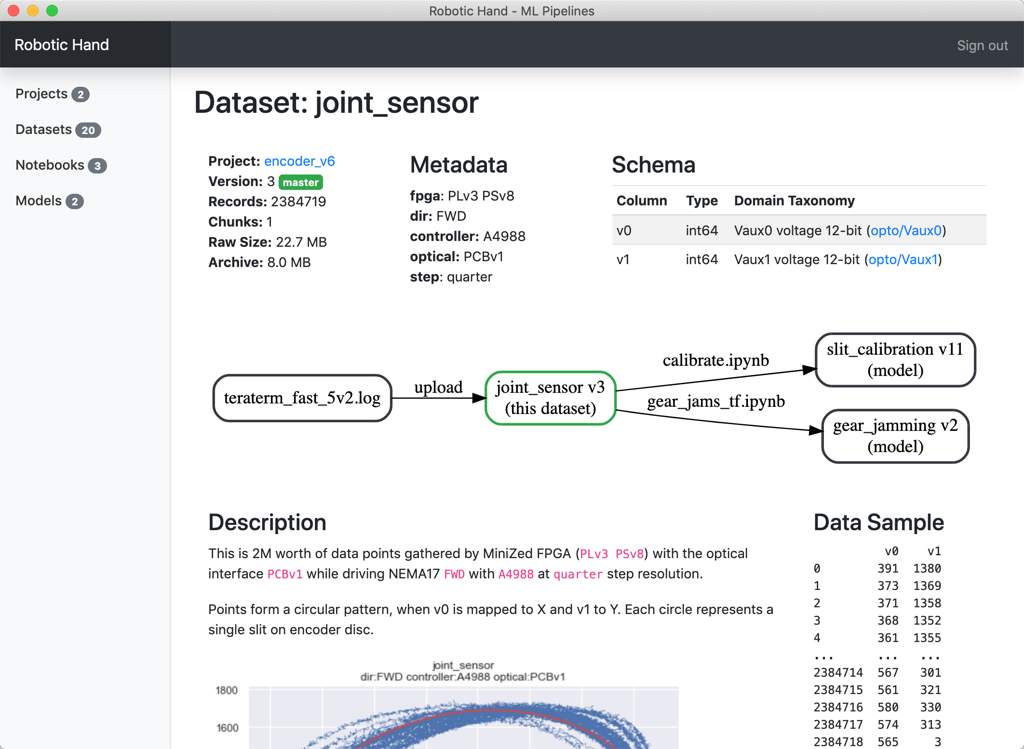

This environment stores and structures datasets, models and notebooks in an opinionated way, associating metadata with every bit of information and state change. This makes dependencies and relations explicit, helping with versioning, documentation and collaboration.
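A rough sketch of what "associating metadata with every bit of information" could look like is shown here. It is not the actual environment: the `Artifact` and `Catalog` names, the content-derived ids and the metadata keys are all hypothetical, chosen only to illustrate explicit dependencies between datasets, models and notebooks.

```python
import hashlib
import json
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Artifact:
    """A dataset, model or notebook plus its metadata (hypothetical shape)."""
    name: str
    kind: str                 # "dataset", "model" or "notebook"
    payload: bytes
    parents: tuple = ()       # ids of the artifacts this one was derived from
    meta: dict = field(default_factory=dict)

    @property
    def id(self):
        # The id is derived from content and metadata, so any change
        # produces a new version instead of overwriting the old one.
        h = hashlib.sha256()
        h.update(self.payload)
        described = [self.name, self.kind, list(self.parents), self.meta]
        h.update(json.dumps(described, sort_keys=True).encode())
        return h.hexdigest()[:12]

class Catalog:
    """In-memory registry that keeps relations between artifacts explicit."""

    def __init__(self):
        self._items = {}

    def add(self, artifact):
        for parent in artifact.parents:
            if parent not in self._items:
                raise KeyError(f"unknown parent {parent}")
        self._items[artifact.id] = artifact
        return artifact.id

    def lineage(self, art_id):
        """Return the ids of all ancestors, nearest first."""
        out, queue = [], list(self._items[art_id].parents)
        while queue:
            cur = queue.pop(0)
            if cur not in out:
                out.append(cur)
                queue.extend(self._items[cur].parents)
        return out
```

With something like this, a question such as "which raw capture did this model come from?" becomes a call to `lineage` rather than a search through notebook history.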

MiniZed FPGA

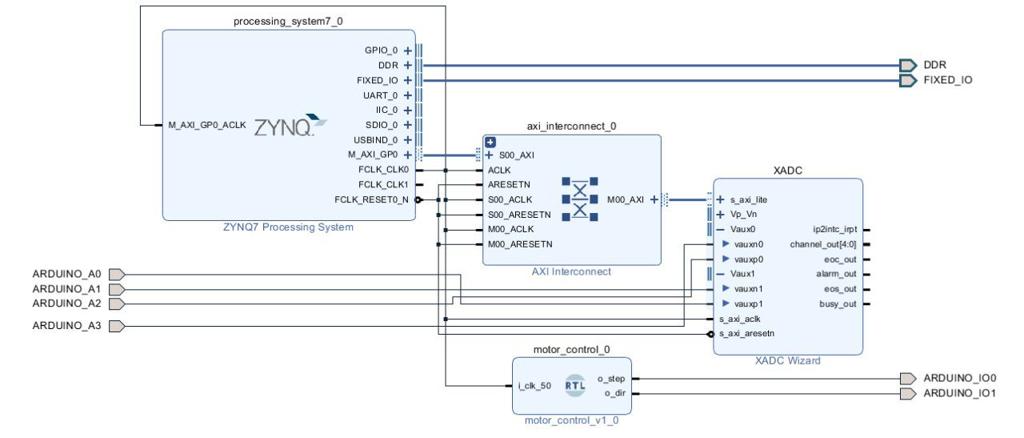

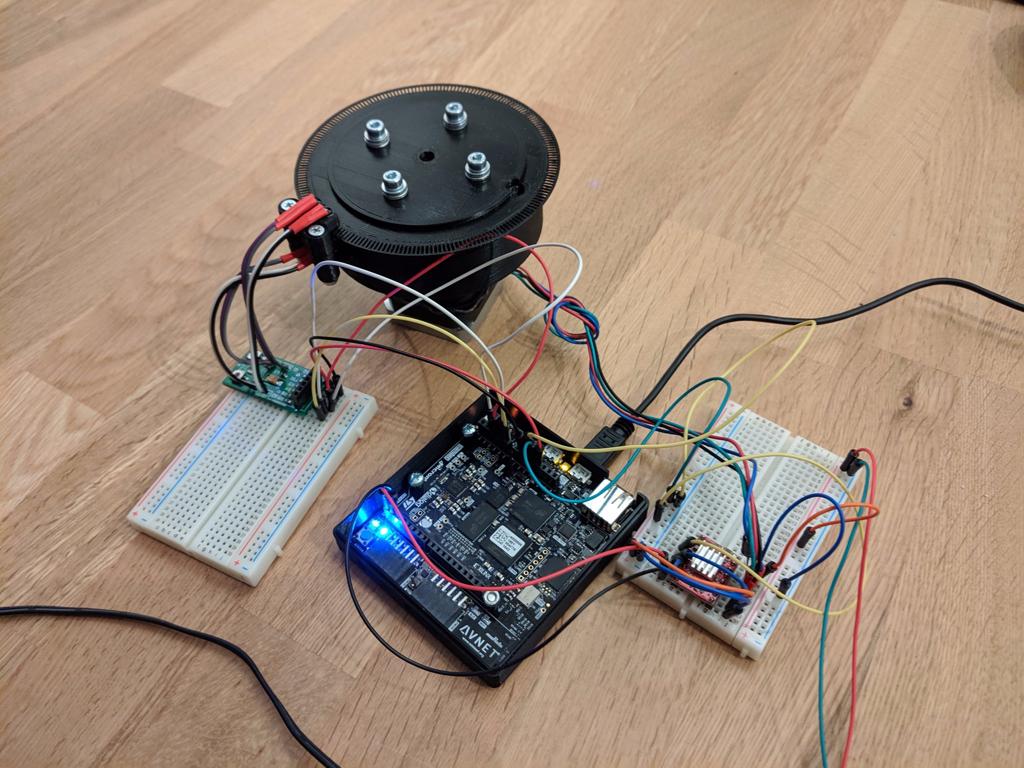

Somewhere along the way, the MiniZed FPGA board finally arrived. It took some time to make sense of the toolchain and board details. Eventually (after multiple failed attempts and banging my head on the desk), the ARM Cortex-A9, the Artix-7 programmable logic, the Xilinx analog-to-digital converter and our own mess of wires started working together (in a naive way).

Here is how our design looks at this stage:

This upgrade allowed us to replace the Arduino Uno, while increasing both sampling precision (11 bits) and sampling frequency (10k-100k samples per second).
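For a sense of scale, the arithmetic behind those numbers can be sketched as follows. The 1.0 V full-scale input range is an assumption made for illustration, not a measured property of our setup, and `adc_summary` is just a throwaway helper name.

```python
def adc_summary(bits=11, full_scale_v=1.0, rates_hz=(10_000, 100_000)):
    """Return (levels, volts per step, sample periods in microseconds).

    full_scale_v is an assumed input range, used only for illustration.
    """
    levels = 2 ** bits                    # distinct codes the ADC can report
    lsb_v = full_scale_v / levels         # voltage covered by one code
    periods_us = [1e6 / rate for rate in rates_hz]
    return levels, lsb_v, periods_us

levels, lsb_v, periods_us = adc_summary()
print(levels)        # 2048
print(periods_us)    # [100.0, 10.0]
```

For comparison, the 10-bit ADC on the Arduino Uno distinguishes 1024 levels, so each extra bit halves the size of a quantization step.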

Next Steps

For the next month, I'll be traveling with my family, away from the hardware. I will take advantage of that by focusing on the software:

- migrate ML Pipelines environment to event-sourced design (to evolve design without worrying about data schema upgrades);

- explore programmable logic in a simulated environment for absolute positioning (with Verilator);

- explore models for compensating jitter in the speed reducer (perhaps even try a hashed perceptron in PL).
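The first item deserves a small illustration. In an event-sourced design, the only thing stored is an append-only log of events, and the current state is rebuilt by replaying that log. The sketch below is hypothetical: the event kinds and the `project` function are invented for this example and don't describe the real environment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    kind: str    # hypothetical event names, see project() below
    data: dict

def project(events):
    """Rebuild the current state (dataset name -> info) by replaying events."""
    state = {}
    for e in events:
        if e.kind == "dataset_recorded":
            state[e.data["name"]] = {"samples": e.data["samples"], "tags": set()}
        elif e.kind == "dataset_tagged":
            state[e.data["name"]]["tags"].add(e.data["tag"])
        elif e.kind == "dataset_discarded":
            state.pop(e.data["name"], None)
    return state

log = [
    Event("dataset_recorded", {"name": "run-01", "samples": 5000}),
    Event("dataset_tagged", {"name": "run-01", "tag": "microstep-16"}),
    Event("dataset_recorded", {"name": "run-02", "samples": 100}),
    Event("dataset_discarded", {"name": "run-02"}),
]
print(project(log))
```

Because the state is derived, changing its shape means writing a new projection and replaying the same log, rather than migrating stored data.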

Meanwhile in Ufa, Aigiz will be working on a new design of the speed reducer gear.

Published: July 06, 2019.

