Structured Output

Summary: Structured Output (constrained decoding based on grammar) forces LLM to respond only according to a predefined schema.

Structured Output was popularised by OpenAI, but since then found its way to multiple cloud providers and local inference engines.

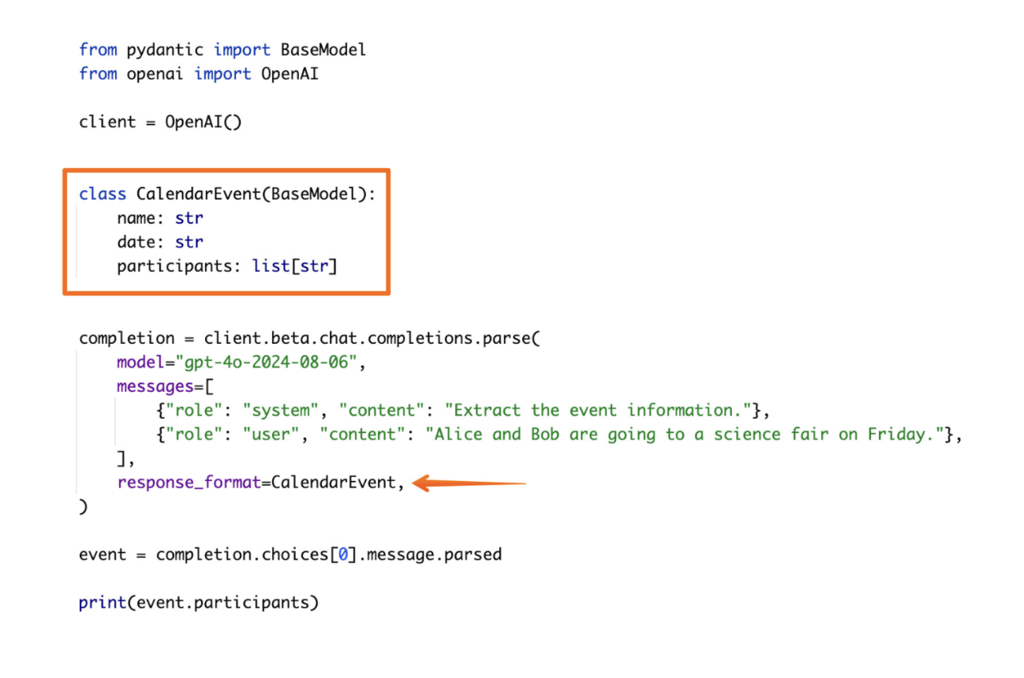

The best way to illustrate the concept is with a code snippet.

Let's say, we want to parse chat messages and extract calendar event data out of them.

One way to approach that is by prompting LLM to respond in a specific format. Then, parsing the response with regular expressions to extract required fields.

Another approach is to use response schema which will ensure that the output will be structured in a certain way. Like this:

Response follows

Response follows CalendarEvent schema, so we can parse and manipulate is as a type object right away. This saves a lot of development time.

The code above prints the list of parsed participants. It will print ['Alice', 'Bob']

When using Python, you can leverage different types of properties to constrain the response:

from pydantic import BaseModel

from typing import Literal, List

class SqlResponse(BaseModel):

sql_query: str

query_type: Literal["read", "write", "delete", "update"]

tables: List[str]

class ComponentResponse(BaseModel):

height_mm: float

width_mm: float

depth_mm: float

number_of_pins: int

component_type: Literal["AC/DC", "DC/DC"]

Different languages will make use of various typing frameworks. Implementation-wise, under the hood everything will most likely be converted to JSON Schema before being passed to LLM inference engine.

Structured output is an essential tool for improving LLM accuracy via Schema-Guided Reasoning (SGR)

How does this work?

Under the hood Structured Output works like a regex for the token generation. LLMs generate probabilities for all tokens at each single token, and constrained decoding simply prohibits certain tokens from happening.

We can illustrate this with a simple snippet. The code below prompts a local model (Mistral 7B in this case): "Write me a mayonnaise recipe. Please answer in Georgian".

By default Mistral 7B is a small model that will not be capable of answering in a lesser-known language, but this specific code will work:

from transformers import AutoModelForCausalLM, AutoTokenizer, LogitsProcessor

import torch

model = AutoModelForCausalLM.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2",torch_dtype=torch.float16, device_map="auto")

tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

# MAGIC HAPPENS HERE

messages = [

{"role": "user", "content": "Write me a mayonnaise recipe. Please answer in Georgian"},

]

tokens = tokenizer.apply_chat_template(messages, return_tensors="pt").to(device)

generated_ids = model.generate(

tokens, max_new_tokens=1000, do_sample=True, num_beams=5,

renormalize_logits=True, logits_processor=[Guidance()])

decoded = tokenizer.batch_decode(generated_ids)

print(decoded[0])

The reason for that is a small class called Guidance which we pass to logit_processor field. This class makes it impossible for LLM to answer in anything but Georgian:

import regex

alphabet = re.compile(r'[\u10A0-\u10FF]+')

punctuation = regex.compile(r'^\P{L}+$')

drop_mask = torch.zeros(1, tokenizer.vocab_size, dtype=torch.bool, device="cuda:0")

for k, v in tokenizer.get_vocab().items():

s = k.lstrip('▁')

if alphabet.match(s) or punctuation.match(s):

continue

drop_mask[0][v]=True

drop_mask[0][tokenizer.eos_token_id]=False

class Guidance(LogitsProcessor):

def __call__(self, input_ids, scores):

return scores.masked_fill(drop_mask, float('-inf'))

Code will work as expected, but will come with a caveat: Mistral 7B will indeed answer only with Georgian letters but would sometimes respond in a complete gibberish.

This highlights the major caveat with Structured Output - it forces the model to respond only in a very predefined format, but:

- this doesn't magically distill the model with corresponding skills

- this can actually reduce model accuracy, because we constrain not only response but also thinking process.

We can leverage Schema-Guided Reasoning (SGR) to use Structured Output while improving accuracy.

Caveats

Caveat: Description Fields

Some LLM APIs will use the response schema twice:

- To compile and load into the inference engine

- To silently insert into a prompt

Because of this, the following structured request will work as expected on OpenAI:

class ResponseFormat(BaseModel):

say_hi_like_a_royal_person_briefly: str = Field(..., description="Respond in German!")

it will respond in German:

{

"say_hi_like_a_royal_person_briefly": "Guten Tag, ich grüße Sie hochachtungsvoll!"

}

However, not all APIs and inference engines do that. You can use the ResponseFormat above to test this assumption.

Caveat: Accuracy

It is very easy to reduce accuracy of a model by introducing constrained decoding. This happens, because we take the ability of a model to think before providing an answer. Schema-Guided Reasoning (SGR) could help to mitigate the problem.

Implementations

- Structured Outputs by OpenAI

- Mistral Structured Outputs

- Structured Outputs with Google Gemini

- Local: XGrammar

- Local: Outlines

Next post in Ship with ChatGPT story: Shipping products with LLMs and ChatGPT

🤗 Check out my newsletter! It is about building products with ChatGPT and LLMs: latest news, technical insights and my journey. Check out it out