(Over) Designing privacy-first analytics

While getting rid of Google Analytics and setting up Lean web analytics for my website, I hit a problem: balancing privacy against gathering feedback.

My current setup uses standard web access logs, which include IP addresses and timestamps. That is enough to track the actions of individual users on the website, ad blocker or not.

However, I don't want to record IP addresses or use session cookies: IP addresses are personal information, and cookies require consent.

At the same time, I want to see:

- which pages people find interesting, to motivate me to write more;

- how people arrive at a specific page and where they go next, to improve the information structure.

I don't want to spy on specific people; I just want aggregate feedback.

So what if we changed conventional web analytics like this?

- don't persist IP addresses, not even after hashing;

- don't use any cookies, fingerprints, or identifiers;

- on every page request from the website, send two additional headers:

  - `X-Page-Num`: is this the 1st, 2nd, or Nth page visited on this site?

  - `X-Page-Sec`: how much time was spent on the previous page (rounded to seconds)?
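Both headers come from the client, so the server should treat them as untrusted input. Below is a minimal sketch of the parsing step, assuming a Node-style `req.headers` object with lower-cased names. The cap `MAX_PAGE_SEC` and the rule that a missing `X-Page-Num` counts as page num 0 (e.g. a first page load that didn't go through JavaScript) are my assumptions, not part of the scheme above:

```javascript
// Hypothetical upper bound: longer dwell times are clamped (e.g. a tab left open).
const MAX_PAGE_SEC = 3600;

// Parse a header value as a non-negative integer; null if absent or malformed.
function parseNonNegativeInt(value) {
  if (typeof value !== "string") return null;
  const trimmed = value.trim();
  if (!/^\d+$/.test(trimmed)) return null;
  const n = Number(trimmed);
  return Number.isSafeInteger(n) ? n : null;
}

// `headers` is assumed to be a plain object with lower-cased names,
// like `req.headers` in Node's http module.
function parsePageHeaders(headers) {
  const pageNum = parseNonNegativeInt(headers["x-page-num"]);
  const pageSec = parseNonNegativeInt(headers["x-page-sec"]);
  return {
    // Assumption: a missing or malformed X-Page-Num counts as page num 0.
    pageNum: pageNum ?? 0,
    // Dwell time is unknown when absent; otherwise clamp it to the cap.
    pageSec: pageSec === null ? null : Math.min(pageSec, MAX_PAGE_SEC),
  };
}
```

Clamping instead of rejecting keeps a visit counted even when the dwell time is implausible.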

Server-side, append this information to a daily table. For example:

```
{
  "date": "2022-06-13",               // daily bucket
  "referer": "/lean-web-analytics",   // HTTP Referer header
  "url": "/privacy-first-analytics",  // requested URL
  "visits": {0: 12, 1: 2, 2: 1},      // number of visits, by page num
  "time": {1: 2, 2: 10, 3: 1, 6: 1, 12: 1} // number of visits, by seconds spent on the previous page
}
```
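Appending to that table can be sketched in memory, assuming rows keyed by `(date, referer, url)` that hold the two histograms from the example. `createDailyTable` and `recordVisit` are hypothetical names; a real deployment would issue the equivalent update against a database instead:

```javascript
// In-memory stand-in for the daily table: key -> row.
function createDailyTable() {
  return new Map();
}

// Record one page view. Nothing but the day, referer, url and the two
// header values is kept: no IP address, exact timestamp or identifier.
function recordVisit(table, { date, referer, url, pageNum, pageSec }) {
  // JSON.stringify builds a collision-free composite key.
  const key = JSON.stringify([date, referer, url]);
  let row = table.get(key);
  if (!row) {
    row = { date, referer, url, visits: {}, time: {} };
    table.set(key, row);
  }
  // visits: page num -> number of visits
  row.visits[pageNum] = (row.visits[pageNum] ?? 0) + 1;
  // time: seconds spent on the previous page -> number of visits
  // (skipped when the client didn't report it)
  if (pageSec !== null && pageSec !== undefined) {
    row.time[pageSec] = (row.time[pageSec] ?? 0) + 1;
  }
  return row;
}
```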

Page visits from multiple users (and bots) traveling through the website get aggregated. Without a way to link visits to a session or IP address (because we aren't storing them), it is impossible to figure out who did what.

How can we deduce any statistics from that?

- The number of browser sessions per day? The total count of visits with page num `0`.

- The average time spent on a page per day? Group all rows by `referer`, then average `time`. (`X-Page-Sec` measures the previous page, which is the row's referer.)

- Where do people go from page `X`? Find all rows where `referer == X` and weight them by the number of `visits`.

- How do people come to page `X`? Find all rows where `url == X`.

- How many people leave the site after page `X`? The number of visits to page `X` minus the number of visits from `X` is the number of exits.

Gotchas

What can't we deduce from the statistics?

- The number of unique visitors. We can only count unique sessions.

- Which sequence of pages leads to page `X`. We only know the pages that lead directly to page `X` and can't reconstruct the entire flow, so we can't track a sales funnel exactly.
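A toy model shows why the full flow is lost. It is a hypothetical simplification that keeps only `(referer, url)` pairs: two different sets of journeys through `/b` produce exactly the same aggregate.

```javascript
// Toy model: a session is the ordered list of paths one browser visited.
// Only (referer, url) pairs survive aggregation, as in the daily table.
function aggregate(sessions) {
  const counts = {};
  for (const pages of sessions) {
    pages.forEach((url, i) => {
      const referer = i === 0 ? "(direct)" : pages[i - 1];
      const key = `${referer} -> ${url}`;
      counts[key] = (counts[key] ?? 0) + 1;
    });
  }
  return counts;
}

// Order-independent string form of an aggregate, for comparison.
function canonical(counts) {
  return JSON.stringify(Object.entries(counts).sort(([a], [b]) => a.localeCompare(b)));
}

// Two different sets of journeys through /b.
const day1 = [["/a", "/b", "/c"], ["/d", "/b", "/e"]];
const day2 = [["/a", "/b", "/e"], ["/d", "/b", "/c"]];

const identical = canonical(aggregate(day1)) === canonical(aggregate(day2)); // true
```

Page numbers don't break the tie either: `/c` and `/e` are the third page in both cases.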

Can ad blockers prevent this setup from working? Only by disabling JavaScript on the website or by tampering with the headers.

Can this setup be misused? Yes, by recording IP addresses and timestamps, as web server access logs do.

How to implement this setup?

Here are just a few ideas.

First, add Hotwire Turbo (or an equivalent) to the website, then add an event listener that attaches the page number and time to each request:

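```javascript
(function () {
  // The initial full page load is not a Turbo fetch, so it carries no headers;
  // the first Turbo navigation sends X-Page-Num: 1.
  let page_num = 1;
  // Roughly when the current page was shown (script load or the last Turbo fetch).
  let page_time = Date.now();

  addEventListener('turbo:before-fetch-request', (event) => {
    const headers = event.detail.fetchOptions.headers;
    headers['X-Page-Num'] = String(page_num);
    // Date.now() is in milliseconds; the header carries whole seconds.
    headers['X-Page-Sec'] = String(Math.round((Date.now() - page_time) / 1000));
    page_time = Date.now();
    page_num++;
  });
})();
```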

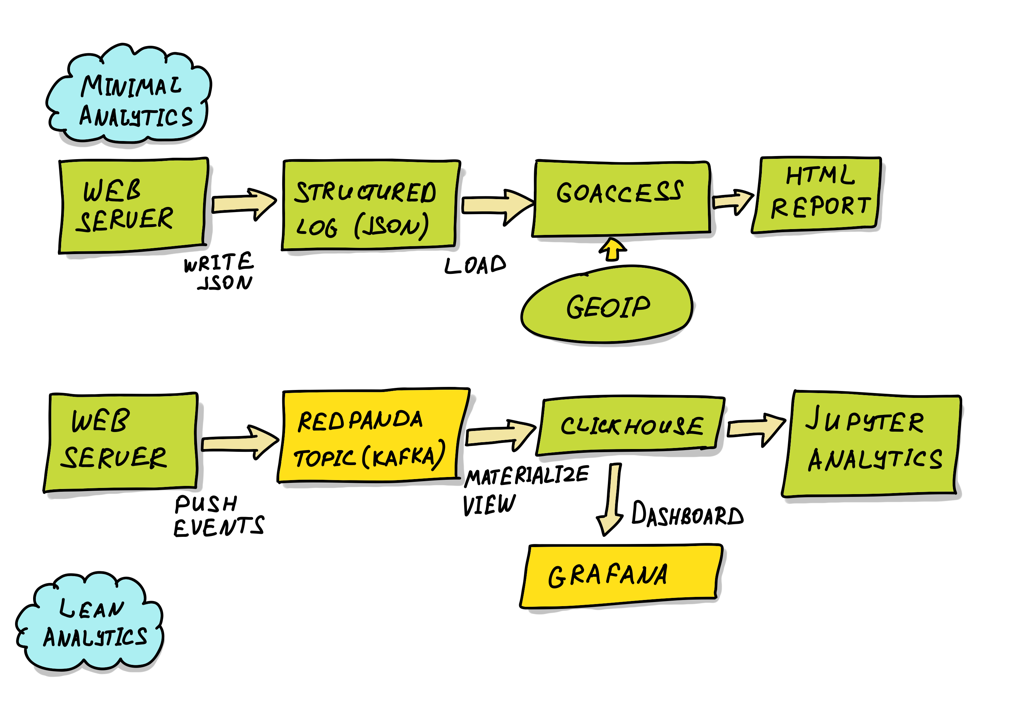

Server-side, drop standard web access logs. Replace them with custom logs that don't persist IP addresses or timestamps. Push these as updates to a database (e.g. ClickHouse). Don't record individual events; aggregate them immediately.
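The server-side step can be sketched under a few assumptions: a Node-style request object, a hypothetical `SITE_ORIGIN`, query strings stripped, and external referers reduced to their origin. The client IP is never read, only the day is kept from the clock, and a plain in-memory counter stands in for the ClickHouse update:

```javascript
// Hypothetical origin of the site, used to tell internal referers from external ones.
const SITE_ORIGIN = "https://example.com";

// Non-negative integer from a header value, or `fallback`.
function intHeader(value, fallback) {
  const n = Number(value);
  return value !== undefined && Number.isInteger(n) && n >= 0 ? n : fallback;
}

// Reduce a request to the fields of the daily table. The client IP
// (req.socket.remoteAddress) is never read, and only the day is kept from the clock.
function toVisit(req, now = new Date()) {
  const url = new URL(req.url, SITE_ORIGIN).pathname; // drop the query string
  let referer = "";
  try {
    const ref = new URL(req.headers.referer);
    // Internal referer -> path only; external referer -> origin only.
    referer = ref.origin === SITE_ORIGIN ? ref.pathname : ref.origin;
  } catch {
    // Missing or malformed Referer: treated as direct traffic ("").
  }
  return {
    date: now.toISOString().slice(0, 10), // daily bucket (UTC)
    referer,
    url,
    pageNum: intHeader(req.headers["x-page-num"], 0),
    pageSec: intHeader(req.headers["x-page-sec"], null),
  };
}

// Aggregate immediately: bump a counter and discard the visit itself.
// (The time histogram would be updated the same way.)
const counters = new Map();
function handle(req, now) {
  const v = toVisit(req, now);
  const key = JSON.stringify([v.date, v.referer, v.url, v.pageNum]);
  counters.set(key, (counters.get(key) ?? 0) + 1);
}
```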

If you use a message bus between the web server and ClickHouse, set a short retention period (e.g. a day), so that individual analytics messages don't live long. Redpanda might be a good fit (resource-efficient, Kafka-compatible, and passes Jepsen).

For small cases, you could even use NSQ without spilling over to disk. NSQ used to handle 10-30M events per day from thousands of connected devices on a single box (read more in [[2017-09-12 real-time analytics|real-time analytics with LMDB, Go and NSQ]]).
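For scale, 10-30M events per day averages out to a modest rate (peaks are of course higher):

$$
\frac{10 \times 10^{6}\ \text{events}}{86\,400\ \text{s}} \approx 116\ \text{events/s},
\qquad
\frac{30 \times 10^{6}\ \text{events}}{86\,400\ \text{s}} \approx 347\ \text{events/s}
$$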

Next

Let's validate whether this approach actually works.

We'll take a few shortcuts and use clickhouse-local to build a PoC: Analyze website logs with clickhouse-local.

Published: June 13, 2022.
