Scenario-based Unit Tests for DDD with Event Sourcing

I'm still in the process of learning Domain-Driven Design for CQRS coupled with Event Sourcing.

One of the things I really like about this approach is the ability to thoroughly and reliably unit test even complex domains. As I found out yesterday in a learning project, a unit test can be a simple scenario (.txt) file that looks like this:

    Given
    .ContextCreated
    {
      "ContextId": "10000000-0000-0000-0000-000000000000",
      "Name": "Name",
      "Rank": 12
    }

    When
    .RenameContext
    {
      "ContextId": "10000000-0000-0000-0000-000000000000",
      "Name": "New Name"
    }

    Expect
    .ContextRenamed
    {
      "ContextId": "10000000-0000-0000-0000-000000000000",
      "Name": "New Name"
    }

Or if we are expecting an exception:

    Given

    When
    .RenameContext
    {
      "ContextId": "10000000-0000-0000-0000-000000000000",
      "Name": "New Name"
    }

    Expect
    .InvalidOperation
    {
    }

Basically each unit test ensures that:

- Given certain events in the past (they determine state of the Aggregate Root)

- When we call a single command (that's the behavior we are testing)

- The expected outcome is expressed as either 0..N events or an exception. These events determine both the state of the AR and what is published to the bus (for further consumption by the CQRS architecture).

These tests are written by hand (or generated from the UI or by recording sessions). You just drop them into a folder of your liking inside the test project, which feels like less dev friction than regular .NET unit tests. There is no need even to launch Visual Studio and add item references: the build picks scenarios up automagically by means of a slightly modified project file:
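To make the Given/When/Expect split concrete, here is a minimal sketch of an aggregate that both scenarios above could run against. All names in it (`ContextAggregate`, `Rename`, the message classes, the `Action<object>` publisher) are hypothetical stand-ins for illustration, not the types from my learning project. The only assumption it makes is that state changes happen exclusively in `Apply`, while command handlers decide which events to emit.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical message contracts mirroring the scenario files above.
public sealed class ContextCreated { public Guid ContextId; public string Name; public int Rank; }
public sealed class RenameContext { public Guid ContextId; public string Name; }
public sealed class ContextRenamed { public Guid ContextId; public string Name; }

// Hypothetical aggregate: Apply mutates state, commands only emit events.
public sealed class ContextAggregate
{
    readonly Action<object> _publish;
    readonly Dictionary<Guid, string> _names = new Dictionary<Guid, string>();

    public ContextAggregate(Action<object> publish) { _publish = publish; }

    // "Given": replay a past event to rebuild state; nothing is published.
    public void Apply(object e)
    {
        var created = e as ContextCreated;
        if (created != null) { _names[created.ContextId] = created.Name; return; }
        var renamed = e as ContextRenamed;
        if (renamed != null) { _names[renamed.ContextId] = renamed.Name; }
    }

    // "When": validate the command against current state, then emit an event.
    public void Rename(RenameContext c)
    {
        if (!_names.ContainsKey(c.ContextId))
            throw new InvalidOperationException("Unknown context " + c.ContextId);
        var e = new ContextRenamed { ContextId = c.ContextId, Name = c.Name };
        Apply(e);     // update own state
        _publish(e);  // "Expect": this is what the test observes
    }
}

public static class Demo
{
    public static void Main()
    {
        var id = new Guid("10000000-0000-0000-0000-000000000000");
        var published = new List<object>();

        // First scenario: one past event, one command, one expected event.
        var root = new ContextAggregate(published.Add);
        root.Apply(new ContextCreated { ContextId = id, Name = "Name", Rank = 12 });
        root.Rename(new RenameContext { ContextId = id, Name = "New Name" });
        Console.WriteLine(published.Count + " event: " + ((ContextRenamed)published[0]).Name);

        // Second scenario: no history, so the same command must fail.
        try
        {
            new ContextAggregate(published.Add).Rename(new RenameContext { ContextId = id, Name = "New Name" });
        }
        catch (InvalidOperationException)
        {
            Console.WriteLine("InvalidOperation");
        }
    }
}
```

Keeping `Apply` free of side effects is what lets the Given events be replayed without showing up in the Expect list.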

    <Target Name="BeforeBuild">
      <CreateItem Include="Scenarios\**\*.txt">
        <Output ItemName="EmbeddedResource" TaskParameter="Include" />
      </CreateItem>
    </Target>

The output will be a nice Project.Tests.Scenarios.dll that contains all the scenarios as embedded resources. Afterwards, you just need to generate tests (one test for each resource). In NUnit you can do something like this (note how Rx makes things so elegantly simple):

    [Test, TestCaseSource("LoadScenarios")]
    public void Test(Scenario scenario)
    {
        // Surface parse errors as a failure of the corresponding test.
        if (null != scenario.LoadingFailure)
            throw scenario.LoadingFailure;

        var observer = new Subject<Change>();
        var root = new SolutionAggregateRoot(observer.OnNext);

        // Given: replay past events to bring the aggregate root into the required state.
        foreach (var @event in scenario.Given)
        {
            root.Apply(@event);
        }

        // When: record only the changes produced while the command executes.
        var interesting = new List<Change>();
        using (observer.Subscribe(interesting.Add))
        {
            DomainInvoker.RouteCommandsToDo(root, scenario.When);
        }

        // Expect: compare the recorded events with the expected ones.
        var actual = interesting.Select(i => i.Event);
        ScenarioManager.AssertAreEqual(scenario.Expect, actual, scenario.Description);
    }
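The body of `ScenarioManager.AssertAreEqual` is not shown in this post. One simple way to compare expected and actual events is to reduce each event to its type name plus its serialized form and compare the resulting sequences. The sketch below does that under a few assumptions: `ScenarioAssert` and the sample `ContextRenamed` class are hypothetical, `System.Text.Json` stands in for the Newtonsoft serializer to keep the example self-contained, and a plain exception replaces the NUnit assertion.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical event contract, used only for the demo below.
public sealed class ContextRenamed
{
    public Guid ContextId { get; set; }
    public string Name { get; set; }
}

public static class ScenarioAssert
{
    // Reduce an event to "TypeName {json}" so that comparison is by value.
    static string Describe(object e)
    {
        return e.GetType().Name + " " + JsonSerializer.Serialize(e, e.GetType());
    }

    // Throws when the two event sequences differ in length, order, type or content.
    public static void AreEqual(IEnumerable<object> expected, IEnumerable<object> actual, string description)
    {
        var want = expected.Select(e => Describe(e)).ToList();
        var got = actual.Select(e => Describe(e)).ToList();
        if (!want.SequenceEqual(got))
        {
            throw new Exception(description + Environment.NewLine
                + "Expected: " + string.Join("; ", want) + Environment.NewLine
                + "Actual:   " + string.Join("; ", got));
        }
    }
}

public static class Demo
{
    public static void Main()
    {
        var id = new Guid("10000000-0000-0000-0000-000000000000");
        var expected = new List<object> { new ContextRenamed { ContextId = id, Name = "New Name" } };
        var same = new List<object> { new ContextRenamed { ContextId = id, Name = "New Name" } };
        var different = new List<object> { new ContextRenamed { ContextId = id, Name = "Other" } };

        ScenarioAssert.AreEqual(expected, same, "rename context");
        Console.WriteLine("match");

        try
        {
            ScenarioAssert.AreEqual(expected, different, "rename context");
        }
        catch (Exception)
        {
            Console.WriteLine("mismatch detected");
        }
    }
}
```

Comparing serialized forms avoids writing `Equals` for every message contract, and the failure message shows exactly which event differed.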

TestCaseSource is a native NUnit attribute that generates a unit test for each argument returned by the referenced collection factory. The latter could look like this:

    // Needs: System, System.Collections, System.IO, System.Linq,
    // System.Reflection and NUnit.Framework.
    public static IEnumerable LoadScenarios()
    {
        var assembly = Assembly.GetExecutingAssembly();
        // Resource name prefix that is stripped to get a readable test name.
        var clean = "Kensho.Domain.Scenarios.Scenarios.";

        foreach (var name in assembly.GetManifestResourceNames().OrderBy(n => n))
        {
            using (var stream = assembly.GetManifestResourceStream(name))
            using (var reader = new StreamReader(stream))
            {
                Scenario scenario;
                var testName = name.Replace(clean, "").Replace(".txt", "");
                try
                {
                    scenario = ScenarioManager.Parse(reader);
                }
                catch (Exception ex)
                {
                    // Keep the failure so that the test itself reports it.
                    scenario = new Scenario { LoadingFailure = ex };
                }

                var data = new TestCaseData(scenario)
                    .SetName(testName)
                    .SetDescription(scenario.Description);

                // Expect may be unset when parsing failed, so guard against null.
                var failure = scenario.Expect == null
                    ? null
                    : scenario.Expect.OfType<IFailure>().FirstOrDefault();
                if (failure != null)
                {
                    data.Throws(failure.GetExceptionType());
                }
                yield return data;
            }
        }
    }
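`ScenarioManager.Parse` is not shown either. As a rough sketch of what parsing this scenario format involves, the code below splits a file into its Given/When/Expect sections and collects each message as a contract name plus its raw JSON text. The names `ScenarioParser` and `RawMessage` are hypothetical, and turning the JSON into actual message objects (the job of the JSON serializer) is deliberately left out. It assumes that section names and `.Contract` markers each sit on their own line.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical holder for one message: contract name plus undecoded JSON body.
public sealed class RawMessage
{
    public string Contract;
    public string Json = "";
}

public static class ScenarioParser
{
    // Returns the messages of each section, keyed by "Given", "When" and "Expect".
    public static Dictionary<string, List<RawMessage>> Parse(TextReader reader)
    {
        var sections = new Dictionary<string, List<RawMessage>>
        {
            { "Given", new List<RawMessage>() },
            { "When", new List<RawMessage>() },
            { "Expect", new List<RawMessage>() }
        };
        List<RawMessage> current = null;
        RawMessage message = null;

        string line;
        while ((line = reader.ReadLine()) != null)
        {
            var text = line.Trim();
            if (text.Length == 0) continue;  // blank lines carry no meaning

            if (sections.ContainsKey(text))
            {
                // A section header starts a new list of messages.
                current = sections[text];
                message = null;
            }
            else if (text[0] == '.')
            {
                // ".ContractName" starts a new message in the current section.
                if (current == null) throw new FormatException("Message outside of a section: " + text);
                message = new RawMessage { Contract = text.Substring(1) };
                current.Add(message);
            }
            else
            {
                // Anything else is part of the JSON body of the current message.
                if (message == null) throw new FormatException("Unexpected line: " + text);
                message.Json += text;
            }
        }
        return sections;
    }
}

public static class Demo
{
    public static void Main()
    {
        var scenario =
            "Given\n.ContextCreated\n{\n\"Name\": \"Name\"\n}\n" +
            "When\n.RenameContext\n{\n\"Name\": \"New Name\"\n}\n" +
            "Expect\n.ContextRenamed\n{\n\"Name\": \"New Name\"\n}";
        var parsed = ScenarioParser.Parse(new StringReader(scenario));
        var when = parsed["When"][0];
        Console.WriteLine(when.Contract + " " + when.Json);
        // prints: RenameContext {"Name": "New Name"}
    }
}
```

Because a malformed file raises a `FormatException`, the `try`/`catch` in the loader can turn it into a `LoadingFailure` and report it as a failing test rather than breaking the whole suite.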

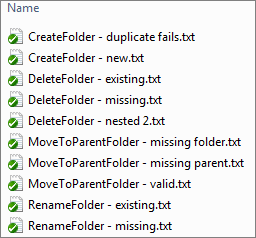

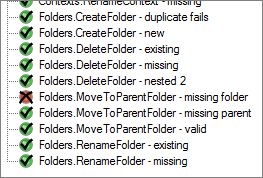

Given all this, a folder like that:

Would be translated into NUnit test suite like this:

I'm still not sure if it is possible to affect NUnit tree structure via the attributes, making it more sensible and clean.

A few notes:

- The latest ReSharper does not work properly with these attributes (they probably haven't upgraded to the latest NUnit engine yet).

- In this case the Newtonsoft JSON serializer is used to persist messages for the scenarios.

- Adding new tests is a really low-friction task. It's easy to have lots of them or to delete unneeded ones.

- Running unit tests is extremely fast and requires nothing other than the AR assembly and the message contracts assembly. Persistence ignorance rules here.

- Essentially, unit tests form specifications for the domain here. Theoretically, they could be captured with the help of the UI (when some undesired behavior occurs) by people who are not familiar with the specific programming language. In essence, we might be separating domain knowledge from the platform/language used to actually code this AR. This should provide some development parallelization and outsourcing opportunities that complement native CQRS features in this area.

- AR unit tests in this case are not really fragile (they test only explicit behaviors) and should protect against regressions really well, while allowing me to move forward incrementally and reliably when developing AR implementations for complex scenarios (something that I've been stuck with so far).

All in all, this experience is based only on my attempt to learn CQRS/DDD/ES by running a learning project. You need to stay ahead of the current development requirements in a fast-paced environment like Lokad. So far this scenario-based approach (inspired by using Fiddler recording sessions to unit test REST APIs) looks quite good, despite the fact that it was formalized and implemented just last night.

Still, I'm really interested in any ways to improve the experience (esp. reducing friction per test). So here are a few questions to the readers:

- Does anybody know of a simpler serialization format for messages than JSON?

- How do you test your domains? Are there simpler ways to organize, manage and run tests? What naming and organizing conventions do you use?

- How do you organize your tests (both code-based and scenario-based) and keep them in sync with the big-picture requirement descriptions?

- Any hints on improving this testing experience?

PS: You can check out xLim 4: CQRS in Cloud series for any latest materials on this topic.

Published: September 17, 2010.
