Importance of Tooling and Statistics in CQRS World

Tooling is extremely important for debugging and managing applications. This is especially true for solutions based on Command-Query Responsibility Segregation (CQRS) or any other message-based enterprise architecture.

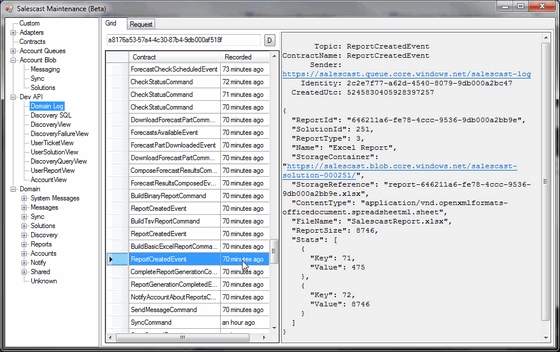

That's how, for example, we approach the problem at Lokad:

Or, if we take a closer look at an event message (rendered from the binary Lokad Message Format into human-readable text):

```
Topic: ReportCreatedEvent
ContractName: ReportCreatedEvent
Sender: https://salescast.queue.core.windows.net/salescast-log
Identity: 2c2e7f77-a62d-4540-8079-9db000a2bc47
CreatedUtc: 5245830405928397257
{
  "ReportId": "646211a6-fe78-4ccc-9536-9db000a2bb9e",
  "SolutionId": 251,
  "ReportType": 3,
  "Name": "Excel Report",
  "StorageContainer": "https://salescast.blob.core.windows.net/salescast-solution-000251/",
  "StorageReference": "report-646211a6-fe78-4ccc-9536-9db000a2bb9e.xlsx",
  "ContentType": "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
  "FileName": "SalescastReport.xlsx",
  "ReportSize": 8746,
  "Stats": [
    {
      "Key": 71,
      "Value": 475
    },
    {
      "Key": 72,
      "Value": 8746
    }
  ]
}
```
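To make the structure of such a message more concrete, here is a minimal Python sketch of how the event could be modelled and rendered into the header-plus-JSON text view above. The `Stat` type, the reduced field set and the `render` helper are assumptions for illustration only; the binary Lokad Message Format itself is not reproduced, and the meaning of statistic codes such as 71 and 72 is left open.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class Stat:
    Key: int    # numeric statistic code; its meaning is application-specific
    Value: int


@dataclass
class ReportCreatedEvent:
    # Hypothetical subset of the fields shown in the dump above.
    ReportId: str
    SolutionId: int
    ReportType: int
    Name: str
    FileName: str
    ReportSize: int
    Stats: List[Stat] = field(default_factory=list)


def render(event, sender: str, identity: str) -> str:
    """Render an event as text headers followed by an indented JSON body."""
    contract = type(event).__name__
    headers = [
        f"Topic: {contract}",
        f"ContractName: {contract}",
        f"Sender: {sender}",
        f"Identity: {identity}",
    ]
    # asdict() also converts the nested Stat objects into plain dicts.
    body = json.dumps(asdict(event), indent=2)
    return "\n".join(headers) + "\n" + body
```

Keeping `Stats` as a generic list of key/value pairs means new statistics can be added without changing the event contract.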

Tooling helps us here to:

- better understand and visualize dependencies and interactions within the solution;

- derive custom reports and run queries against the events that happened during the application lifetime;

- debug and troubleshoot potential issues that happened in the past;

- replay commands, script the environment and automate certain tasks;

- capture the information needed to discover and eliminate performance bottlenecks (the Stats structure holds the primary execution statistics associated with processing the command message).

The last bullet point actually answers a question I promised to address a long time ago: how to track statistics related to the message lifecycle as a message passes through the system.

So we manually capture the necessary statistics in the context of the message and append them to the appropriate events. Statistics could include things like:

- Number of records processed;

- CPU resources used;

- Upload/download speed;

- Merge-diff statistics;

- etc.
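The capture step could look roughly like the following Python sketch: a small accumulator lives for the duration of one command message, and its contents are appended to the event that the handler produces. The `MessageStats` class, the `ProductsSyncedEvent` event, the handler and the statistic codes are all hypothetical; the real codes used at Lokad are not documented here.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical statistic codes, for illustration only.
RECORDS_PROCESSED = 1
BYTES_DOWNLOADED = 2
ELAPSED_MS = 3


class MessageStats:
    """Accumulates statistics while a single command message is processed."""

    def __init__(self) -> None:
        self._values: Dict[int, int] = {}
        self._started = time.perf_counter()

    def add(self, key: int, amount: int) -> None:
        self._values[key] = self._values.get(key, 0) + amount

    def to_pairs(self) -> List[Tuple[int, int]]:
        # Add the wall-clock time spent so far, then return sorted (key, value) pairs.
        values = dict(self._values)
        values[ELAPSED_MS] = int((time.perf_counter() - self._started) * 1000)
        return sorted(values.items())


@dataclass
class ProductsSyncedEvent:  # hypothetical domain event
    SolutionId: int
    ProductCount: int
    Stats: List[Tuple[int, int]] = field(default_factory=list)


def handle_sync_command(solution_id: int, batches: List[List[str]]) -> ProductsSyncedEvent:
    stats = MessageStats()
    count = 0
    for batch in batches:
        # A real handler would download this batch from the source database here.
        stats.add(BYTES_DOWNLOADED, sum(len(item.encode("utf-8")) for item in batch))
        stats.add(RECORDS_PROCESSED, len(batch))
        count += len(batch)
    # The statistics travel with the domain event instead of going to a separate log.
    return ProductsSyncedEvent(SolutionId=solution_id, ProductCount=count, Stats=stats.to_pairs())
```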

These statistics might not make a lot of sense on their own. For example, download speed means different things depending on whether we are retrieving data from SQL Azure in North Europe or from a MySQL database on shared hosting in Moscow. That's why we capture and persist them along with the domain events, which are bound to the timeline and provide the context of the situation.

When the event is consumed by some component (e.g., to update a denormalized UI view), we would probably ignore these statistics; they come into play later.
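As a small illustration, a denormalizer that maintains a UI view simply reads the domain fields and skips the statistics. The event shape and the `ReportListView` class below are hypothetical Python stand-ins, not Lokad code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ReportCreatedEvent:  # hypothetical, reduced event shape
    ReportId: str
    SolutionId: int
    Name: str
    ReportSize: int
    Stats: List[Tuple[int, int]] = field(default_factory=list)


class ReportListView:
    """Denormalized read model: report names per solution, for the UI."""

    def __init__(self) -> None:
        self.reports: Dict[int, List[str]] = {}

    def when(self, e: ReportCreatedEvent) -> None:
        # Only the domain fields matter for this view; e.Stats is deliberately ignored.
        self.reports.setdefault(e.SolutionId, []).append(e.Name)
```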

Events are stored in the domain log, where they are available for Time Machine-style queries. Then, when we need to figure out a performance bottleneck or understand the specifics of some situation, it's just a matter of doing event stream analysis (there's plenty of literature on that already) and writing the proper queries.

Once we have statistics in the context of actual domain events, nothing prevents us from getting answers to the questions like:

- What's the average item retrieval speed from MySQL databases? How often do we encounter timeouts and deadlocks?

- How many seconds does it take to sync 100k products from a SQL Azure database in the same data center?

- What's the average upload speed to Lokad Forecasting API for datasets larger than 10k series, after that API upgrade in the last iteration?
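A query for the first question could be sketched in Python as below, assuming the domain log can be read as an in-memory sequence of events. The `SyncCompleted` event, its fields and the source-kind labels are hypothetical; a real system would run the equivalent logic over its own event store.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Dict, Iterable, List, Optional


@dataclass
class SyncCompleted:  # hypothetical event recorded in the domain log
    created_utc: datetime
    source_kind: str   # e.g. "mysql" or "sql-azure"
    items: int
    seconds: float
    timed_out: bool = False


def retrieval_speed_by_source(log: Iterable[SyncCompleted],
                              since: Optional[datetime] = None) -> Dict[str, float]:
    """Average items/second per source kind, optionally only for events after `since`."""
    speeds: Dict[str, List[float]] = {}
    for e in log:
        if since is not None and e.created_utc < since:
            continue
        if e.timed_out or e.seconds <= 0:
            continue  # timeouts are counted separately, not averaged in
        speeds.setdefault(e.source_kind, []).append(e.items / e.seconds)
    return {kind: mean(values) for kind, values in speeds.items()}


def timeout_rate(log: Iterable[SyncCompleted], source_kind: str) -> float:
    """Fraction of syncs from the given source kind that ended in a timeout."""
    matching = [e for e in log if e.source_kind == source_kind]
    return sum(e.timed_out for e in matching) / len(matching) if matching else 0.0
```

The optional `since` parameter is one way to frame "after that API upgrade" questions: run the same query with and without the cut-off date and compare.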

Theoretically, if we combine these statistics with Time Machine Queries (or continuous queries) and real-time logging, we should be able to do some nice things with CQRS solutions:

- Monitor health state and attach notifications to the key performance indicators of our distributed solutions.

- Detect potential problems in real-time or even ahead of time (if coupled with the forecasting).

- Analyze the real impact of performance optimizations.
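For the monitoring idea in the first bullet, one simple approach is a rolling average of a KPI with a threshold-based notification. The Python sketch below is hypothetical: the `KpiMonitor` class, the window size and the threshold are assumptions, and `notify` stands in for whatever alerting channel a real deployment would use.

```python
from collections import deque
from statistics import mean
from typing import Callable, Deque, Optional


class KpiMonitor:
    """Tracks a rolling average of one KPI and calls `notify` when it drops below a threshold."""

    def __init__(self, name: str, window: int, min_value: float,
                 notify: Callable[[str], None]) -> None:
        self.name = name
        self.values: Deque[float] = deque(maxlen=window)
        self.min_value = min_value
        self.notify = notify
        self.alerting = False

    def observe(self, value: float) -> Optional[float]:
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return None  # not enough data points yet
        avg = mean(self.values)
        if avg < self.min_value and not self.alerting:
            # Notify once per breach instead of on every new value.
            self.alerting = True
            self.notify(f"{self.name}: rolling average {avg:.1f} below {self.min_value}")
        elif avg >= self.min_value:
            self.alerting = False
        return avg
```

Feeding it values extracted from incoming events (for instance, items per second from the statistics attached to each sync event) would turn the stored statistics into a live health indicator.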

All in all, this helps us better understand the realm of the solution as it evolves in an ever-changing reality. This allows us to reduce the resources (developers, time and budget) required for delivery, while keeping the quality of project deliverables high.

This article is part of the xLim 4 Body of Knowledge. You are welcome to subscribe to the updates and leave any feedback!

Published: July 11, 2010.
